by Michael A. Caulfield

.

.

.

.

.

.

Media Attributions

- 88×31

by Michael A. Caulfield

.

.

.

.

.

.

Web Literacy for Student Fact Checkers by Mike Caulfield is licensed under a Creative Commons Attribution 4.0 International License, except where otherwise noted.

Cover image: “building-blocks-colorful-build-456616” by Counselling. Pixabay License.

1

Sincere thanks to

Jon Udell, who introduced to me the idea of web strategies;

Ward Cunningham, who taught me the culture of wiki;

Sam Wineburg, whose encouragement and guidance helped me focus on the bits that mattered;

AASCU’s American Democracy Project, which believed in this work;

and most importantly, my wife and family who tolerate the coffee shop weekends from which all great (and even mediocre) books are made.

I

1

The web is a unique terrain, substantially different from print materials. Too often, attempts at teaching information literacy for the web do not take into account both the web’s unique challenges and its unique affordances.

Much web literacy I’ve seen either asks students to look at web pages and think about them, or teaches them to publish and produce things on the web. While both of these activities are valuable, neither addresses a set of real problems students confront daily: evaluating the information that reaches them through their social media streams. For these daily tasks, students need concrete strategies and tactics for tracing claims to sources and for analyzing the nature and reliability of those sources.

The web gives us many such strategies, tactics, and tools, which, properly used, can get students closer to the truth of a statement or image within seconds. Unfortunately, we do not teach students these specific techniques. As many people have noted, the web is both the largest propaganda machine ever created and the most amazing fact-checking tool ever invented. But if we haven’t taught our students those fact-checking capabilities, is it any surprise that propaganda is winning?

This is an unabashedly practical guide for the student fact-checker. It supplements generic information literacy with the specific web-based techniques that can get you closer to the truth on the web more quickly.

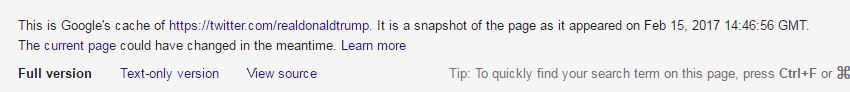

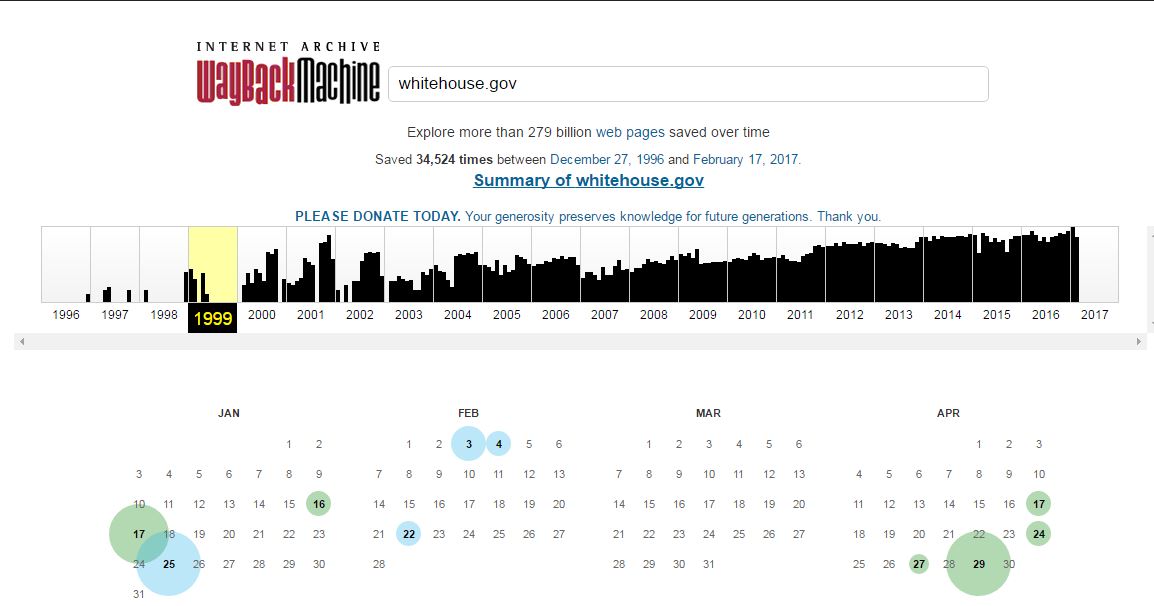

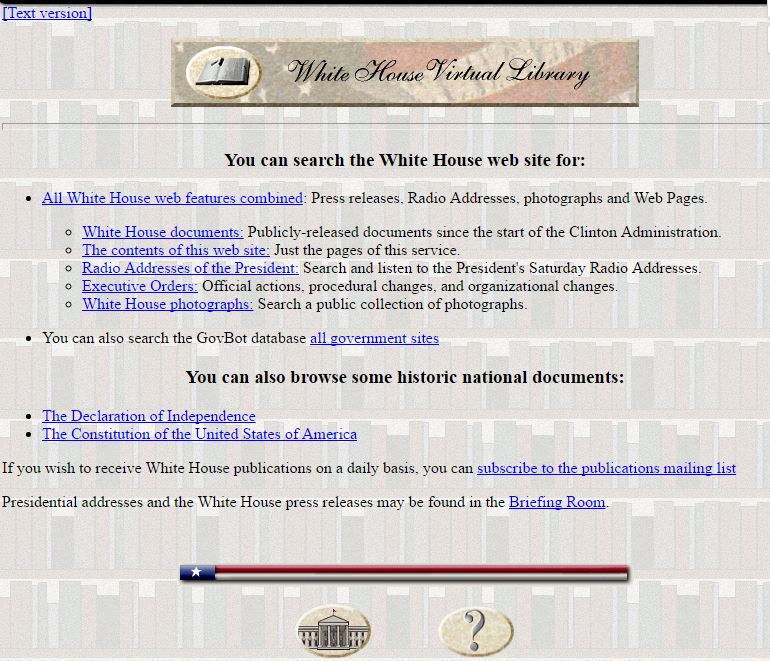

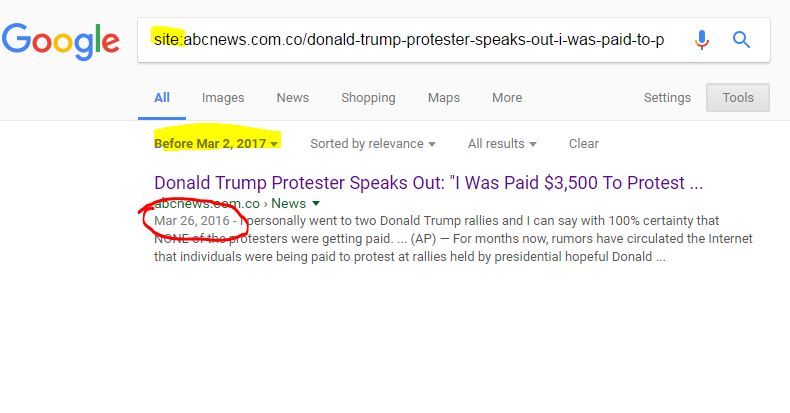

This guide will show you how to use date filters to find the source of viral content, how to assess the reputation of a scientific journal in less than five seconds, and how to see if a tweet is really from the famous person you think it is or from an impostor. It’ll show you how to find pages that have been deleted, figure out who paid for the website you’re looking at, and whether the weather portrayed in that viral video actual matches the weather in that location on that day. It’ll show you how to check a Wikipedia page for recent vandalism and how to search the text of almost any printed book to verify a quote. It’ll teach you to parse URLs and scan search result blurbs so that you are more likely to get to the right result on the first click. And it’ll show you how to avoid baking confirmation bias into your search terms.

In other words, this guide will help you become “web literate” by showing you the unique opportunities and pitfalls of searching for truth on the web. Crazy, right?

This is the instruction manual to reading on the modern internet. I hope you find it useful.

2

What people need most when confronted with a claim that may not be 100% true is things they can do to get closer to the truth. They need something I have decided to call “moves.”

Moves accomplish intermediate goals in the fact-checking process. They are associated with specific tactics. Here are the four moves this guide will hinge on:

In general, you can try these moves in sequence. If you find success at any stage, your work might be done.

When you encounter a claim you want to check, your first move might be to see if sites like Politifact, or Snopes, or even Wikipedia have researched the claim (Check for previous work).

If you can’t find previous work on the claim, start by trying to trace the claim to the source. If the claim is about research, try to find the journal it appeared in. If the claim is about an event, try to find the news publication in which it was originally reported (Go upstream).

Maybe you get lucky and the source is something known to be reputable, such as the journal Science or the newspaper the New York Times. Again, if so, you can stop there. If not, you’re going to need to read laterally, finding out more about this source you’ve ended up at and asking whether it is trustworthy (Read laterally).

And if at any point you fail–if the source you find is not trustworthy, complex questions emerge, or the claim turns out to have multiple sub-claims–then you circle back, and start a new process. Rewrite the claim. Try a new search of fact-checking sites, or find an alternate source (Circle back).

3

In addition to the moves, I’ll introduce one more word of advice: Check your emotions.

This isn’t quite a strategy (like “go upstream”) or a tactic (like using date filters to find the origin of a fact). For lack of a better word, I am calling this advice a habit.

The habit is simple. When you feel strong emotion–happiness, anger, pride, vindication–and that emotion pushes you to share a “fact” with others, STOP. Above all, these are the claims that you must fact-check.

Why? Because you’re already likely to check things you know are important to get right, and you’re predisposed to analyze things that put you an intellectual frame of mind. But things that make you angry or overjoyed, well… our record as humans are not good with these things.

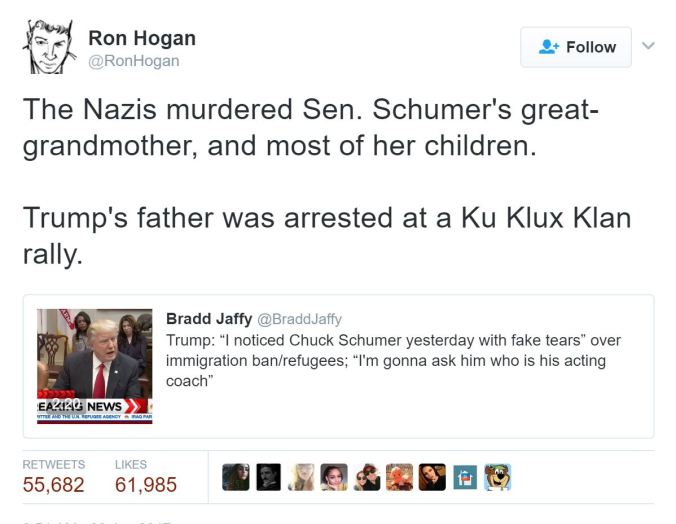

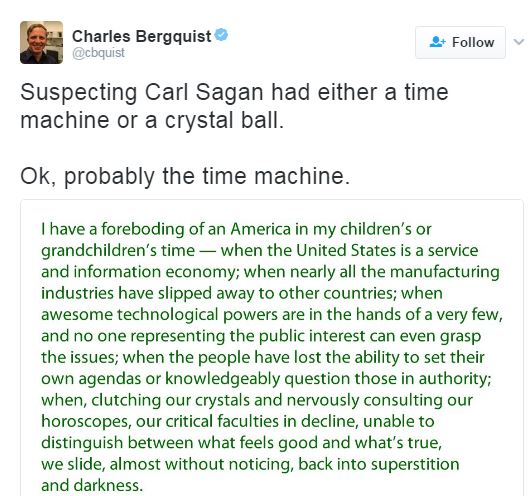

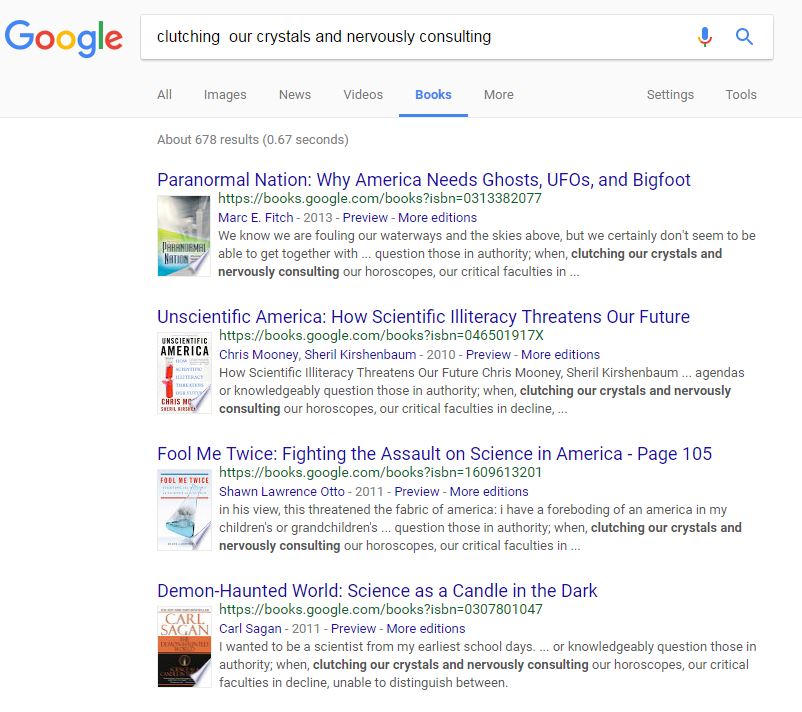

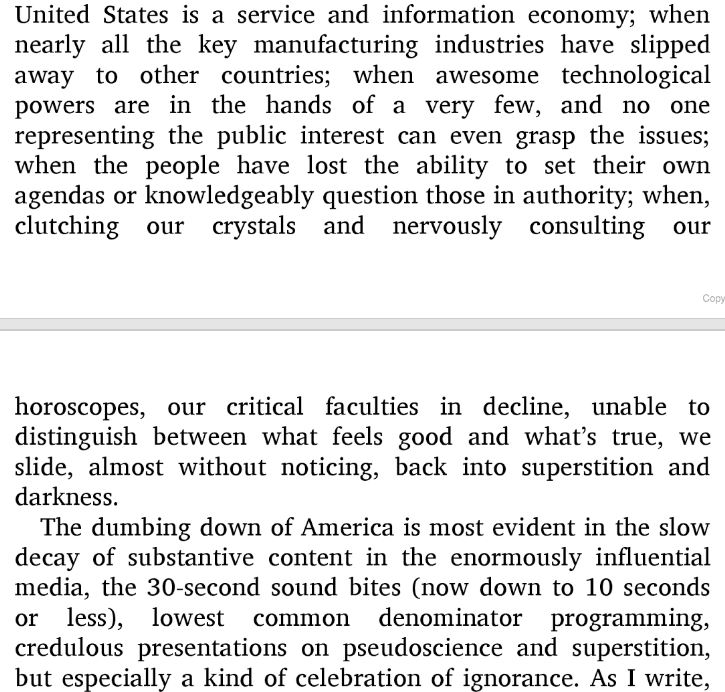

As an example, I’ll cite this tweet that crossed my Twitter feed:

You don’t need to know much of the background of this tweet to see its emotionally charged nature. President Trump had insulted Chuck Schumer, a Democratic Senator from New York, and characterized the tears that Schumer shed during a statement about refugees as “fake tears.” This tweet reminds us that that Senator Schumer’s great-grandmother died at the hands of the Nazis, which could explain Schumer’s emotional connection to the issue of refugees.

Or does it? Do we actually know that Schumer’s great-grandmother died at the hands of the Nazis? And if we are not sure this is true, should we really be retweeting it?

Our normal inclination is to ignore verification needs when we react strongly to content, and researchers have found that content that causes strong emotions (both positive and negative) spreads the fastest through our social networks.See "What Emotion Goes Viral the Fastest?" by Matthew Shaer. Savvy activists and advocates take advantage of this flaw of ours, getting past our filters by posting material that goes straight to our hearts.

Use your emotions as a reminder. Strong emotions should become a trigger for your new fact-checking habit. Every time content you want to share makes you feel rage, laughter, ridicule, or even a heartwarming buzz, spend 30 seconds fact-checking. It will do you well.

II

4

When fact-checking a particular claim, quote, or article, the simplest thing you can do is to see if someone has already done the work for you.

This doesn’t mean you have to accept their finding. Maybe they assign a claim “four Pinocchios,” but you would rate it three. Maybe they find the truth “mixed,” but honestly it looks “mostly false” to you.

Regardless of the finding, a reputable fact-checking site or subject wiki will have done much of the leg work for you: tracing claims to their source, identifying the owners of various sites, and linking to reputable sources for counterclaims. And that legwork, no matter what the finding, is probably worth ten times your intuition. If the claims and the evidence they present ring true to you, or if you have built up a high degree of trust in the site, then you can treat the question as closed. But even if you aren’t satisfied, you can start your work from where they left off.

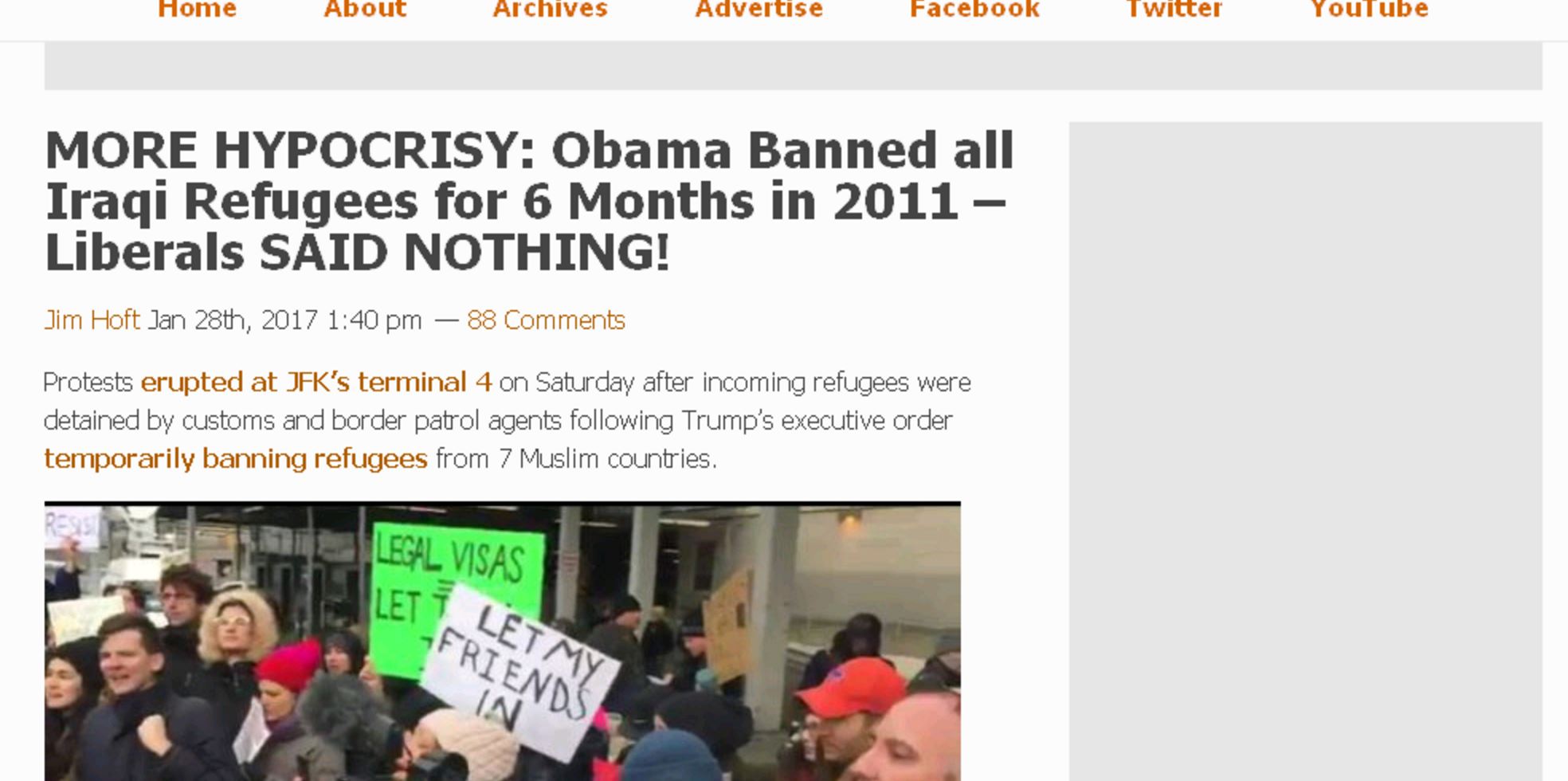

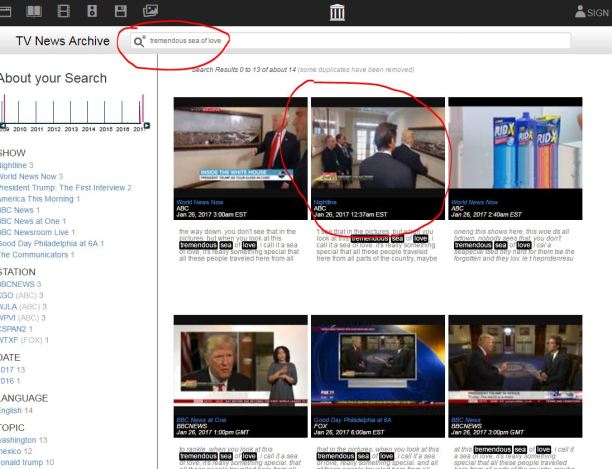

You can find previous fact-checking by using the “site” option in search engines such as Google and DuckDuckGo to search known and trusted fact-checking sites for a given phrase or keyword. For example, if you see this story,

then you might use this query, which checks a couple known fact-checking sites for the keywords: obama iraqi refugee ban 2011. Let’s use the DuckDuckGo search engine to look for the keywords:

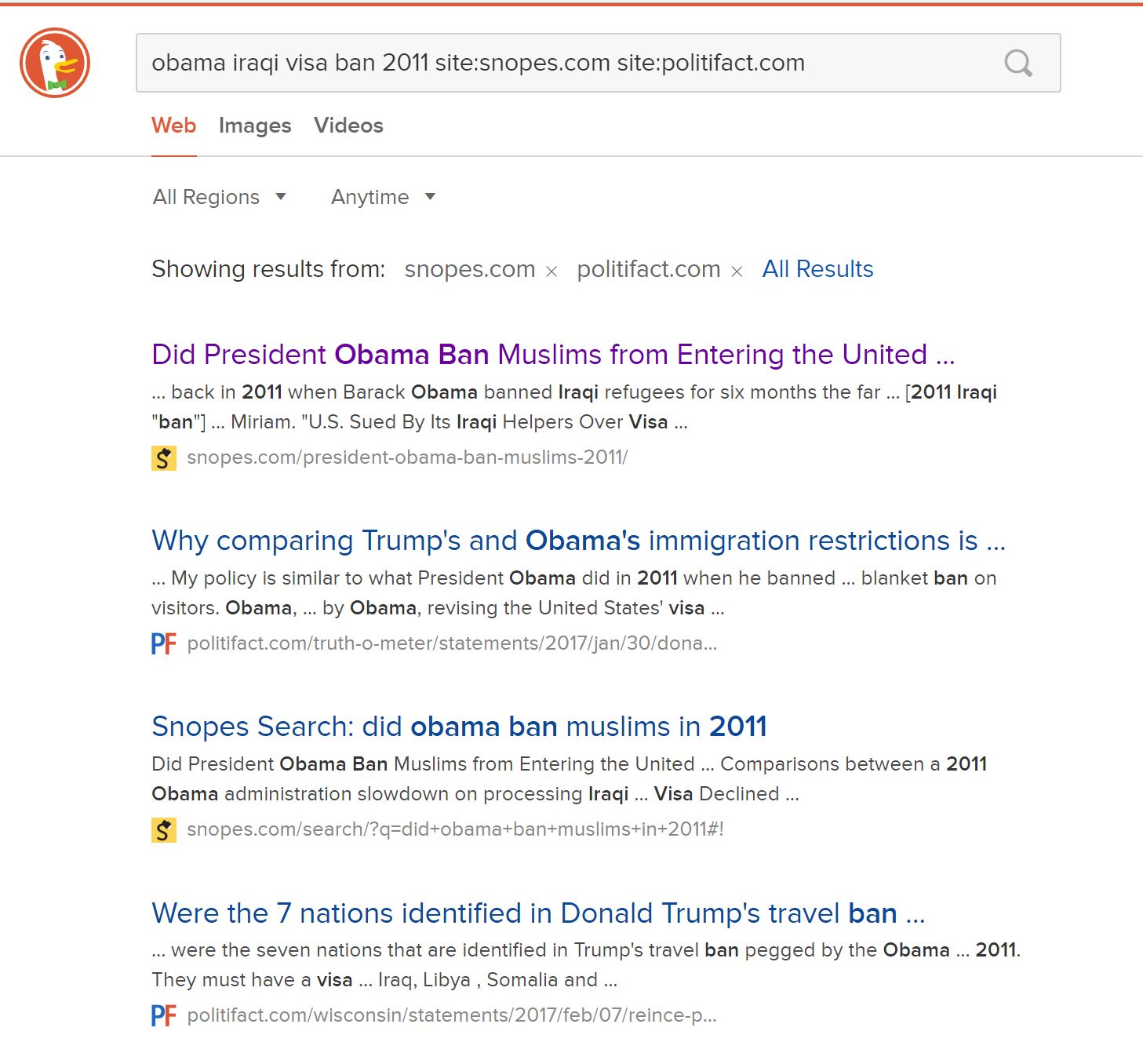

obama iraqi visa ban 2011 site:snopes.com site:politifact.com

Here are the results of our search:

You can see the search here. The results show that work has already been done in this area. In fact, the first result from Snopes answers our question almost fully. Remember to follow best search engine practice: scan the results and focus on the URLs and the blurbs to find the best result to click in the returned result set.

There are similar syntaxes you can use in Google, but for various reasons this particular search is easier in DuckDuckGo.

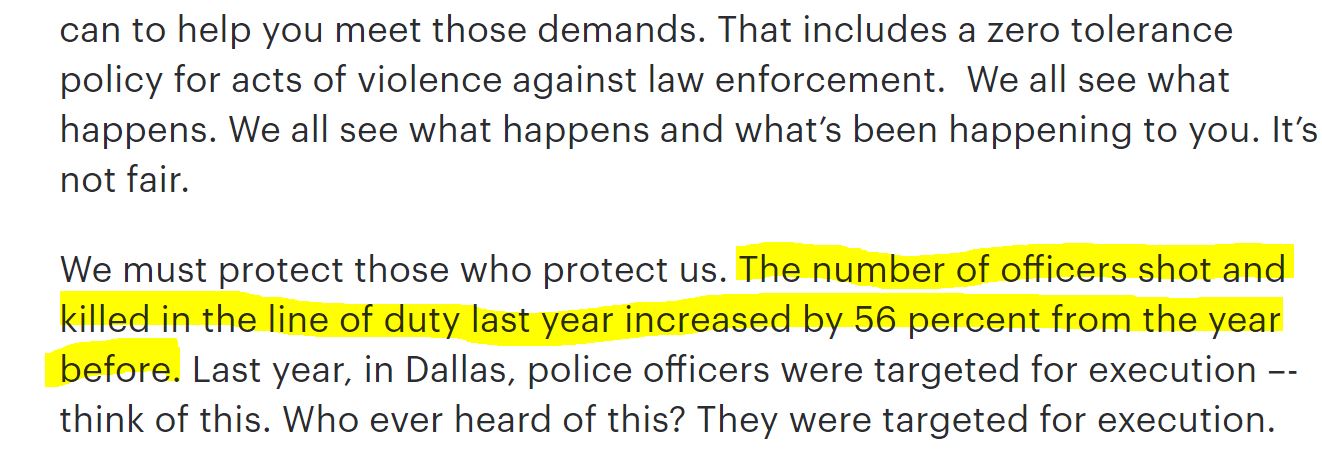

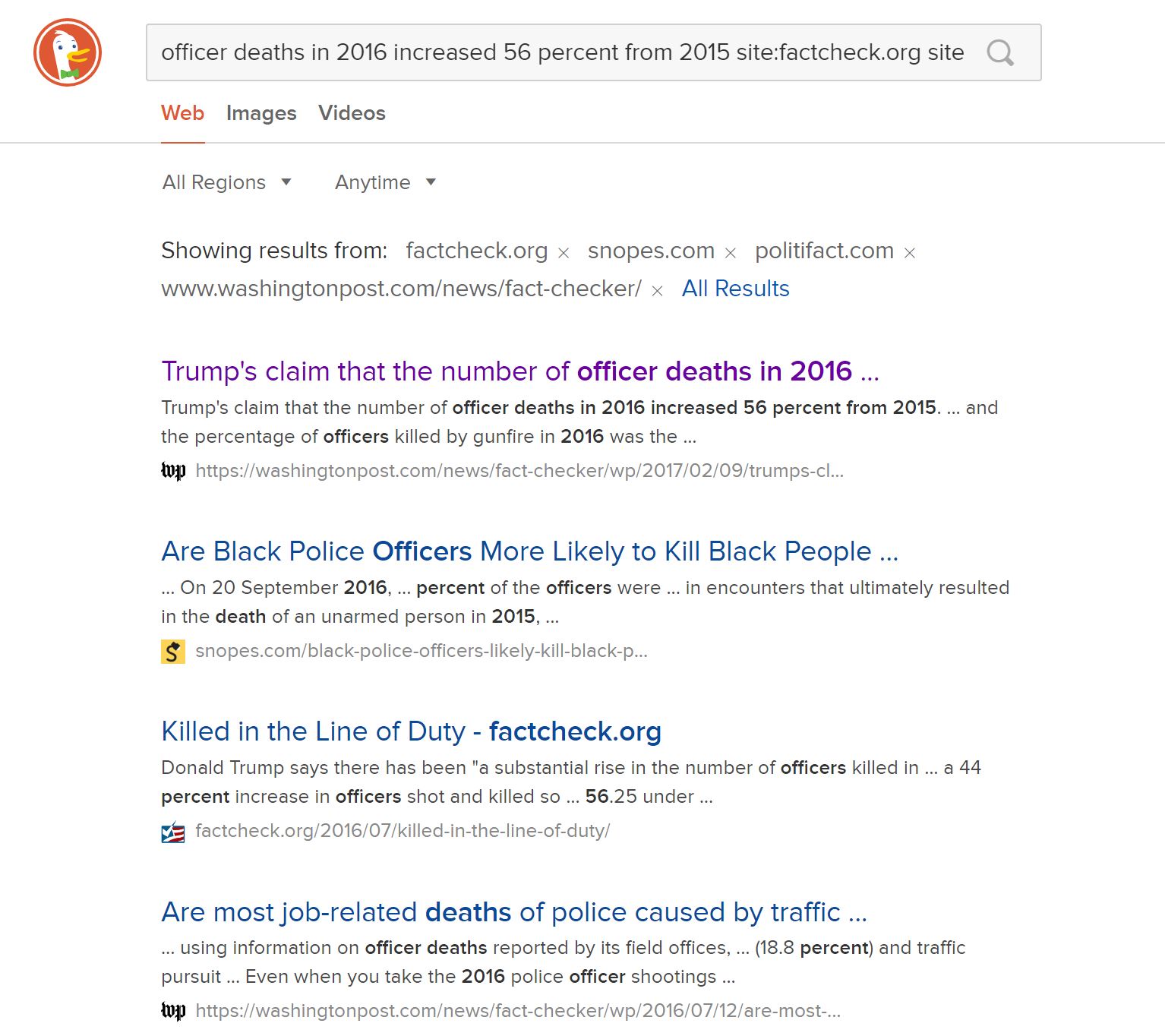

Let’s look at another claim, this time from the President. This claim is that police officer deaths increased 56 percent from 2015 to 2016. Here it is in context:

Let’s ramp it up with a query that checks four different fact-checking sites:

officer deaths 2016 increased 56 percent from 2015 site:factcheck.org site:snopes.com site:politifact.com site:www.washingtonpost.com/news/fact-checker/

This gives us back a helpful array of results. The first, from the Washington Post, actually answers our question directly, but some of the others provide some helpful context as well.

Going to the Washington Post lets us know that this claim is, for all intents and purposes, true. We don’t need to go further, unless we want to.

5

The following organizations are generally regarded as reputable fact-checking organizations focused on U.S. national news:

Respected specialty sites cover niche areas such as climate or celebrities. Here are a few examples:

There are many fact-checking sites outside the U.S. Here is a small sample:

6

Wikipedia is broadly misunderstood by faculty and students alike. While Wikipedia must be approached with caution, especially with articles that are covering contentious subjects or evolving events, it is often the best source to get a consensus viewpoint on a subject. Because the Wikipedia community has strict rules about sourcing facts to reliable sources, and because authors must adopt a neutral point of view, its articles are often the best available introduction to a subject on the web.

The focus on sourcing all claims has another beneficial effect. If you can find a claim expressed in a Wikipedia article, you can almost always follow the footnote on the claim to a reliable source. Scholars, reporters, and students can all benefit from using Wikipedia to quickly find authoritative sources for claims.

As an example, consider a situation where you need to source a claim that the Dallas 2016 police shooter was motivated by hatred of police officers. Wikipedia will summarize what is known about his motives and, more importantly, will source each claim, as follows:

Chief Brown said that Johnson, who was black, was upset about recent police shootings and the Black Lives Matter movement, and “stated he wanted to kill white people, especially white officers.”[4][5] A friend and former coworker of Johnson’s described him as “always [being] distrustful of the police.”[61] Another former coworker said he seemed “very affected” by recent police shootings of black men.[64] A friend said that Johnson had anger management problems and would repeatedly watch video of the 1991 beating of Rodney King by police officers.[85]

Investigators found no ties between Johnson and international terrorist or domestic extremist groups.[66]

Each footnote leads to a source that the community has deemed reliable. The article as a whole contains over 160 footnotes. If you are researching a complex question, starting with the resources and summaries provided by Wikipedia can give you a substantial running start on an issue.

III

7

Our second move, after finding previous fact-checking work, is to “go upstream.” We use this move if previous fact-checking work was insufficient for our needs.

What do we mean by “go upstream”?

Consider this claim on the conservative site the Blaze:

Is this claim true?

Of course we can check the credibility of this article by considering the author, the site, and when it was last revised. We’ll do some of that, eventually. But it would be ridiculous to do it on this page. Why? Because like most news pages on the web, this one provides no original information. It’s just a rewrite of an upstream page. We see the indication of that here:

All the information here has been collected, fact-checked (we hope!), and written up by the Daily Dot. It’s what we call “reporting on reporting.” There’s no point in evaluating the Blaze’s page.

So what do we do? Our first step is to go upstream. Go to the original story and evaluate it. When you get to the Daily Dot, then you can start asking questions about the site or the source. And it may be that for some of the information in the Daily Dot article you’d want to go a step further back and check their primary sources. But you have to start there, not here.

8

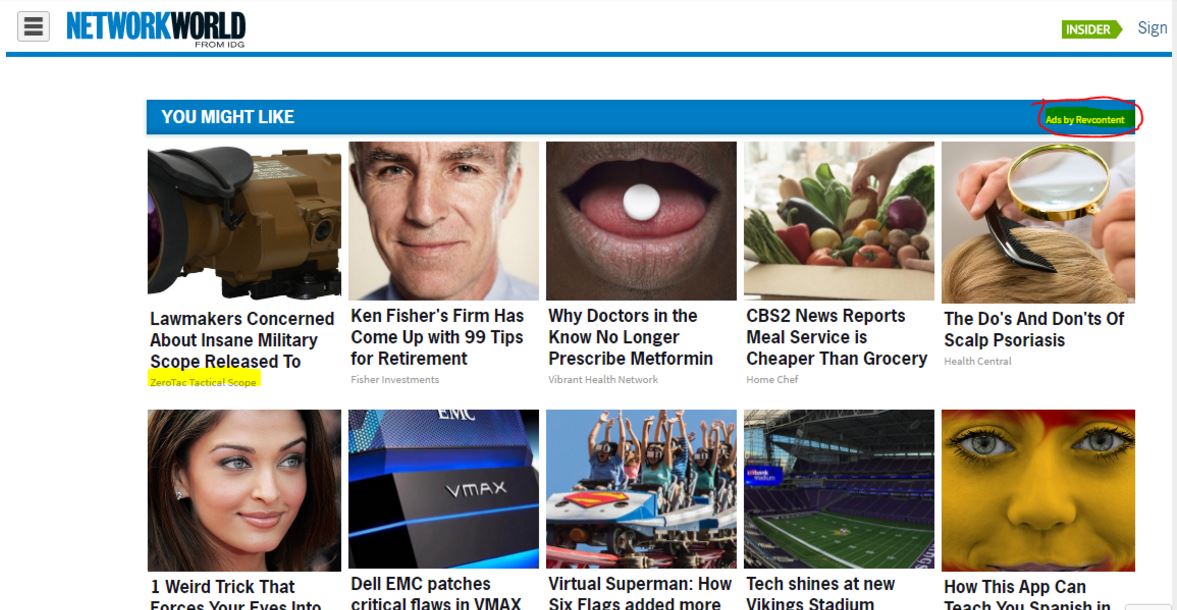

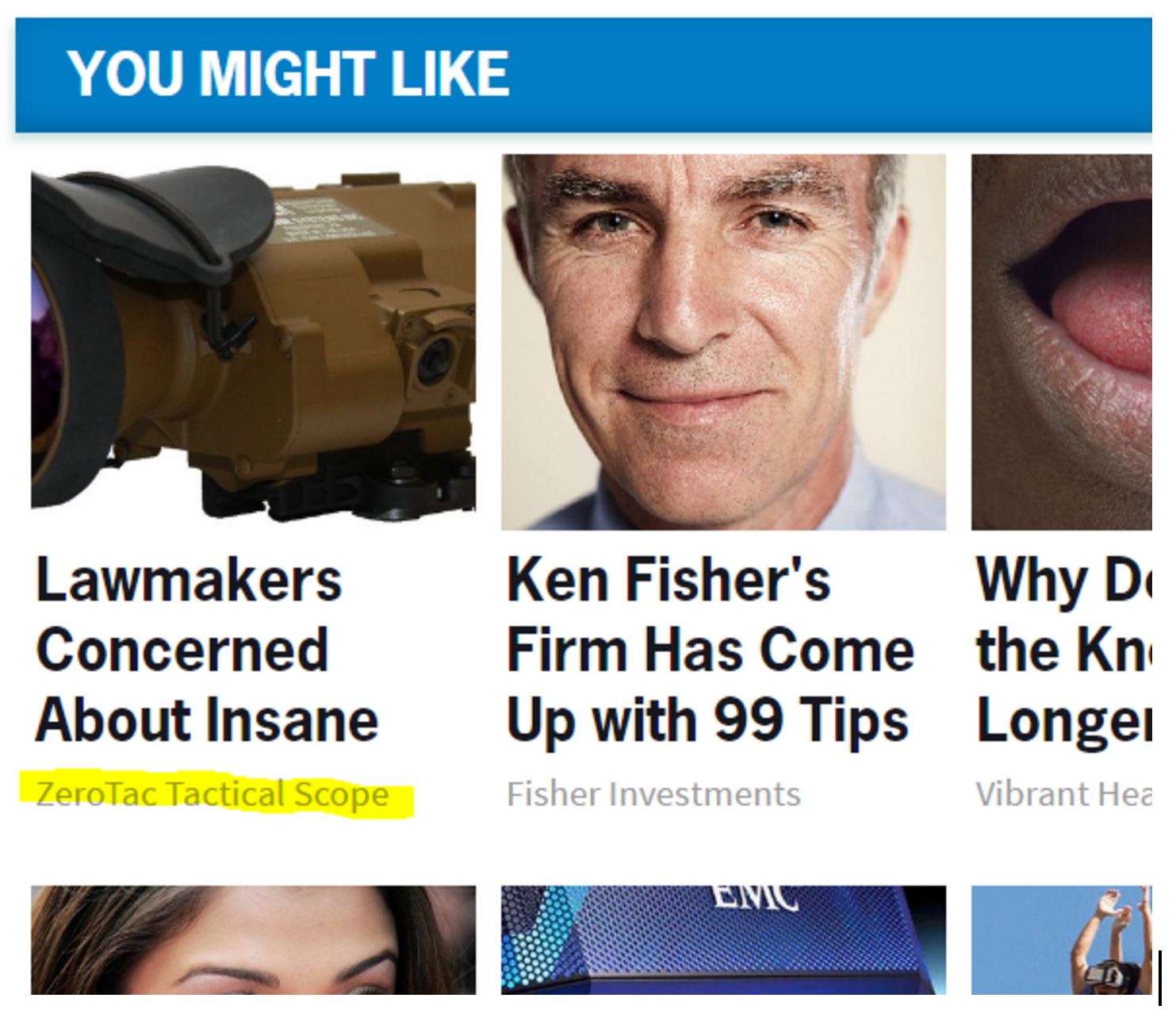

Our warning to “go upstream” before evaluating claims is particularly important with sponsored content. For instance, a lot of time on a site you’ll see “headlines” like these, which I pulled from a highly regarded technology magazine:

Look at the headline in the upper left corner. Are lawmakers really concerned about this insane military scope? Maybe. But note that Network World is not making this claim. Instead, the ZeroTac Tactical Scope company is making the claim:

It’s an ad served from another site into this page in a way that makes it look like a story.

However, sponsored content isn’t always purely an advertisement. Sometimes it provides helpful information. This piece below, for example, is an in-depth look at some current industry trends in information technology.

The source of this article is not InfoWorld, but the technology company Hewlett Packard, and the piece is written by a Vice President of Hewlett Packard, with no InfoWorld oversight. (Keep an eye out on the web for articles that have a “sponsored” indicator above or below them–they are more numerous than you might think!)

You can see how this is not just an issue with political news, but will be an issue in your professional life as well. If you go to work in a technology field and portray this article to your boss as “something I read on InfoWorld”, you’re doing a grave disservice to your company. Portraying a vendor-biased perspective as a neutral InfoWorld perspective is a mistake you might come to regret.

9

Rank the following news sources on how much sponsored content you believe their pages will feature: CNN, Buzzfeed, Washington Post, HuffPost, Brietbart, New York Times.

Individually, or in groups, visit the following pages and list all sponsored content you see, tallying up the total amount on each page. Then rank the sites from most sponsored content to least.

After you’ve ranked the websites, answer these questions:

10

Syndication–the process by which material from one site is published automatically to another site–can create confusion for readers who don’t understand it. It’s a often case where something is coming from “upstream” but appears not to be.

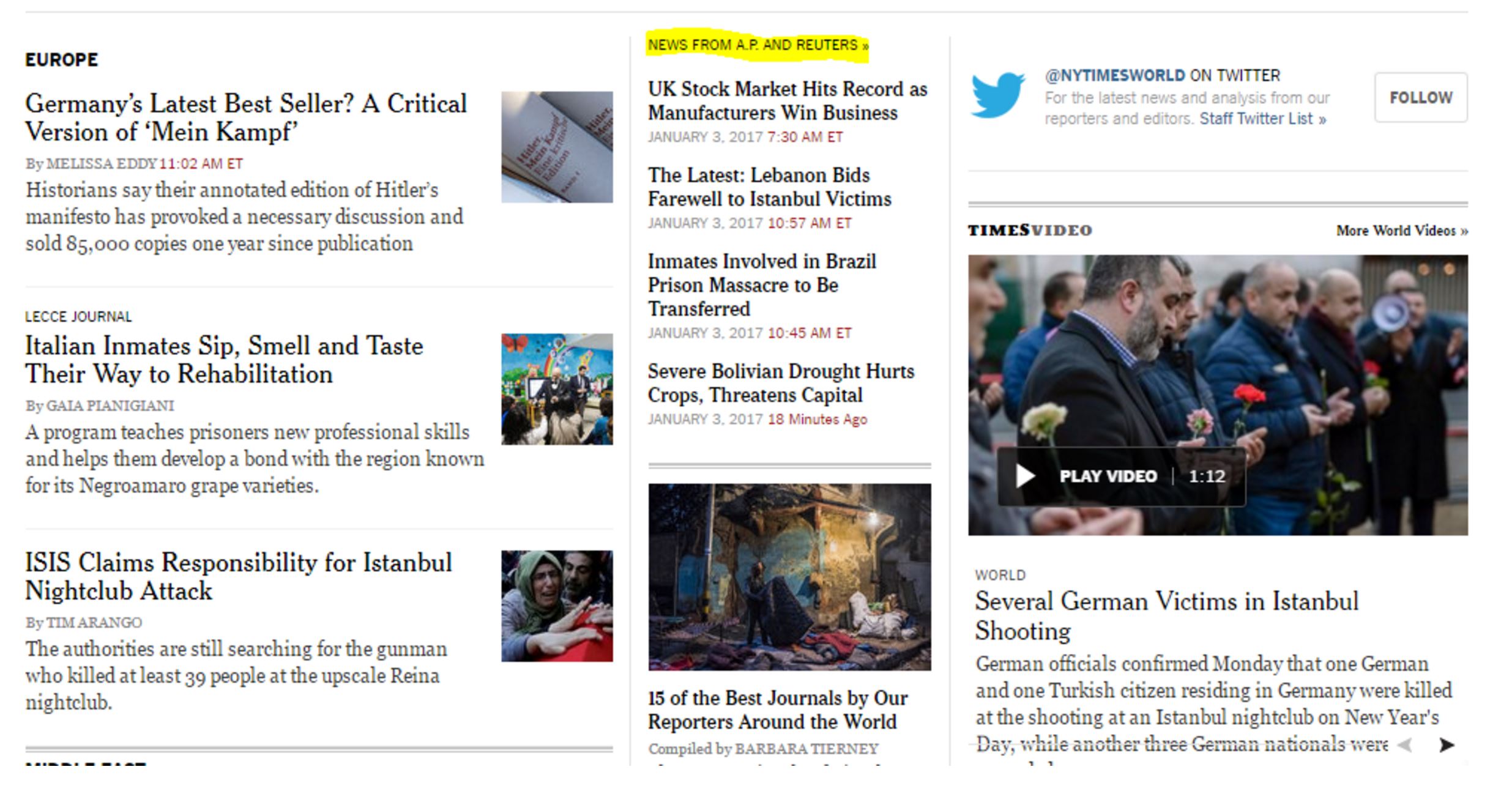

Consider this New York Times web page:

We see a set of stories on the left (“Germany’s Latest Best Seller”, “Isis Claims Responsibility”) written by New York Times staff, but also a thin column of stories in the middle of the page (“UK Stock Market Hits Record”) that are identified as being from the Associated Press.

You click through to a page that’s on the New York Times site, but not by the New York Times:

If you are going to evaluate the source of this article, your evaluation will have little to do with the New York Times. You’re going to focus on the reporting record of the Associated Press.

People get this wrong all the time. One thing that happens occasionally is that an article critical of a certain politician or policy suddenly disappears from the New York Times site, and people claim it’s a plot to rewrite the past. “Conspiracy!” they say. “They’re burying information!” they say. A ZOMG-level freakout follows.

It predominately turns out that the article that disappeared is a syndicated article. Associated Press articles, for example, are displayed on the site for a few weeks, then “roll off” and disappear from the site. Why? Because the New York Times only pays the Associated Press to show them on the site for a few weeks.

You’ll also occasionally see people complaining about a story from the New York Times, claiming it shows a New York “liberal bias” only to find the story was not even written by the New York Times, but by the Associated Press, Reuters, or some other syndicator.

Going upstream means following a piece of content to its true source, and beginning your analysis there. Your first question when looking at a claim on a page should be “Where did this come from, and who produced it?” The answer quite often has very little to do with the website you are looking at.

11

In the examples we’ve seen so far, it’s been straightforward to find the source of the content. The Blaze story, for example, clearly links to the Daily Dot piece so that anyone reading their summary is one click away from confirming it with the source. The New York Times makes apparent that the syndicated content is from the Associated Press, so checking the credibility of the source is readily available to you.

This is good internet citizenship. Articles on the web that repurpose other information or artifacts should state their sources, and, if appropriate, link to them. This matters to creators, because they deserve credit for their work. But it also matters to readers who need to check the credibility of the original sources.

Unfortunately, many people on the web are not good citizens. This is particularly true with material that spreads quickly as hundreds or thousands of people share it–so-called “viral” content.

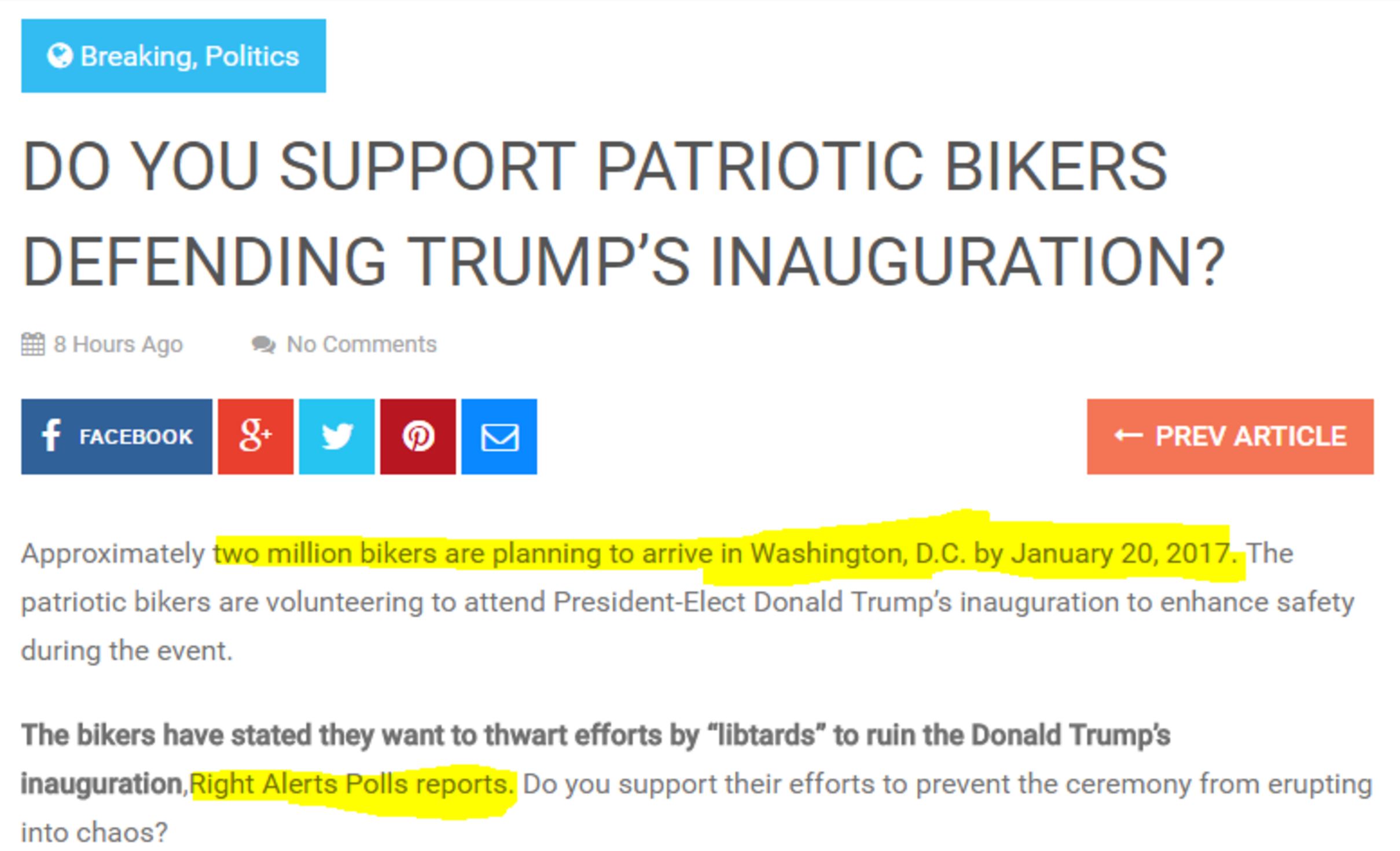

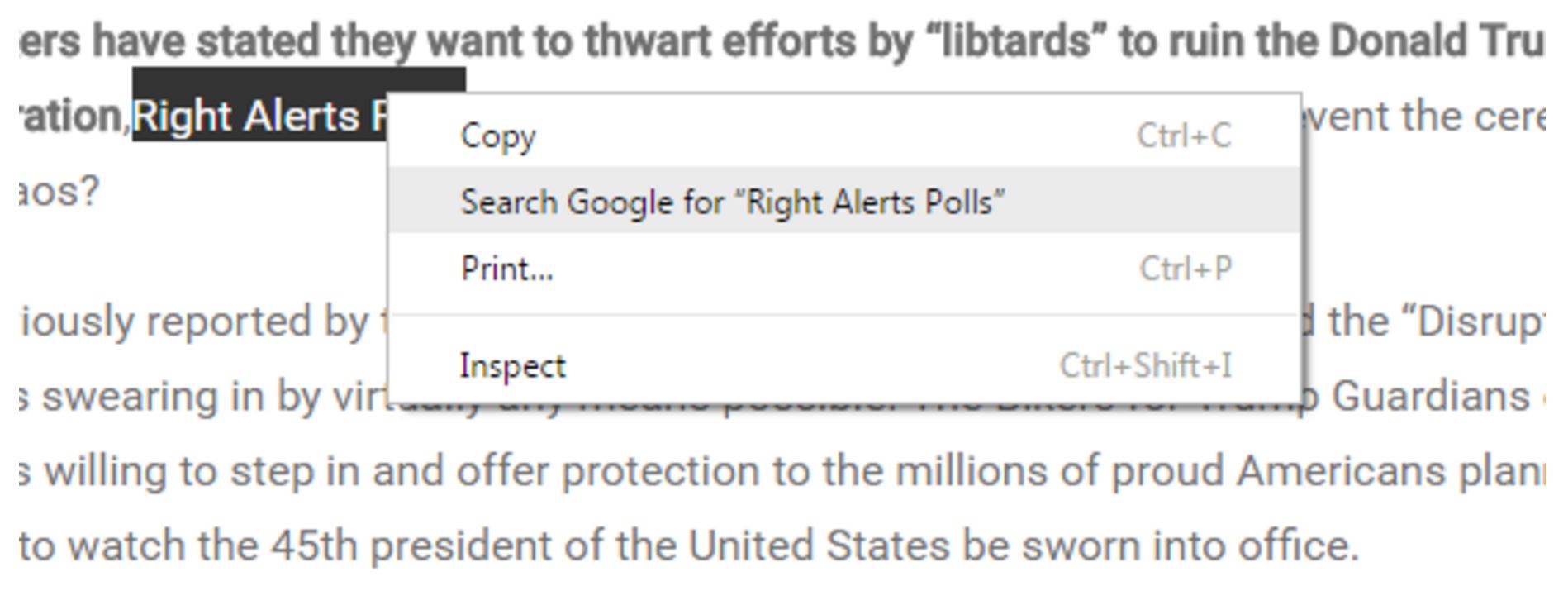

When that information travels around a network, people often fail to link it to sources, or hide them altogether. For example, here is an interesting claim that two million bikers are going to show up for President-elect Trump’s inauguration. Whatever your political persuasion, that would be a pretty amazing thing to see.

But the source of the information, Right Alerts Polls, is not linked.

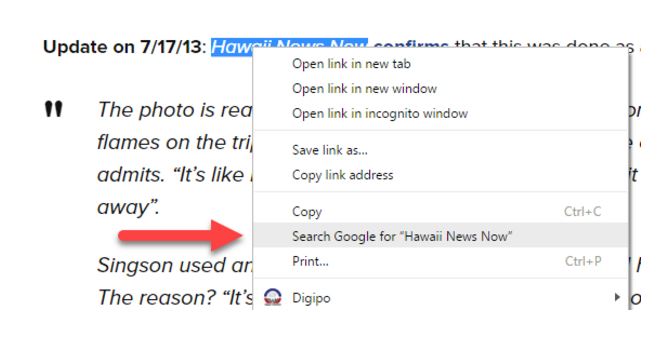

Here’s where we show our first trick. Using the Chrome web browser, select the text “Right Alerts Polls.” Then right-click your mouse (control-click on a Mac), and choose the option to search Google for the highlighted phrase.

Your computer will execute a search for “Right Alerts Polls.” (Remember this right-click/control-click action–it’s going to be the foundation of a lot of stuff we do.)

To find the story, add “bikers” to the end of the search:

We find our upstream article right at the top. Note that if you do not use Chrome, there are analogues of this method in other browsers as well. Right-clicking in Internet Explorer will allow you to search Bing, for example. If you want, you can always do this the slightly longer way by going to Google and typing in the search terms.

So are we done here? Have we found the source?

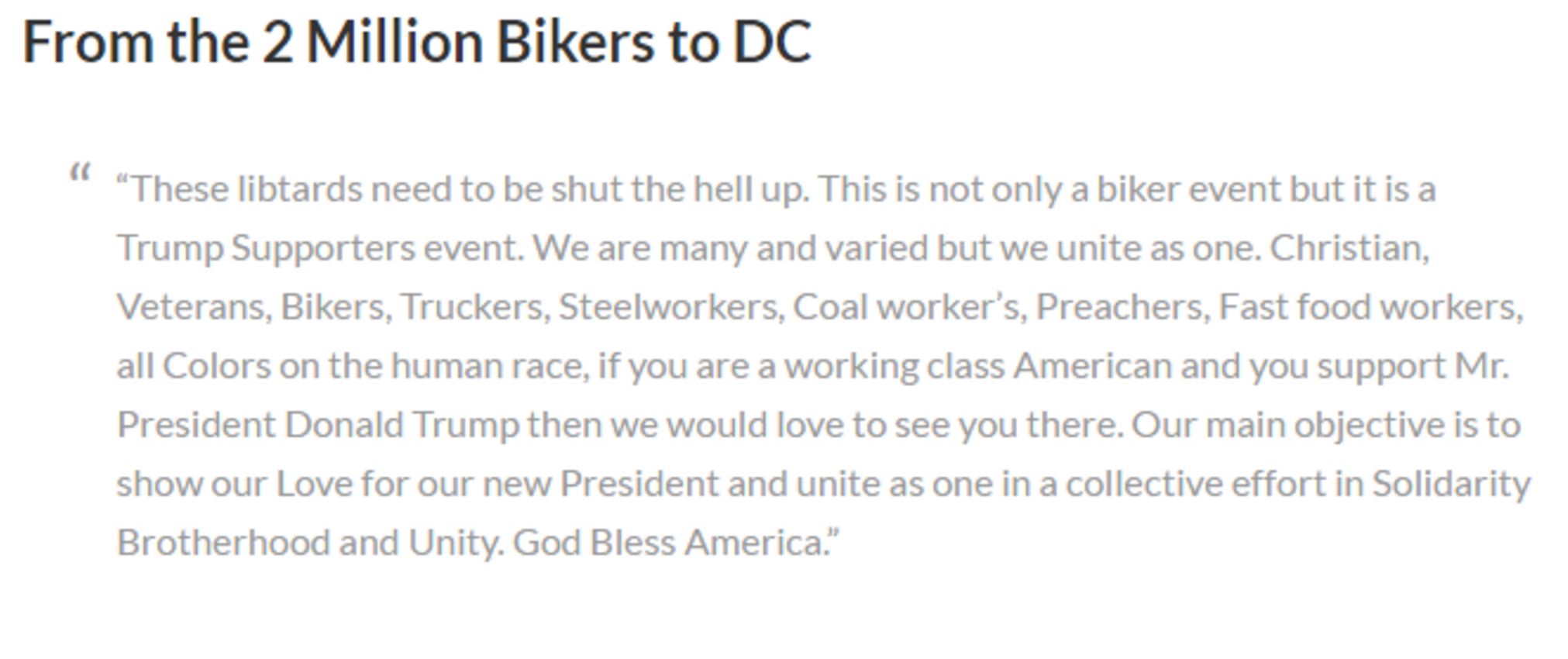

Nope. When we click through to the supposed source article, we find that this article doesn’t tell us where the information is coming from either. However, it does have an extended quote from one of the “Two Million Bikers” organizers:

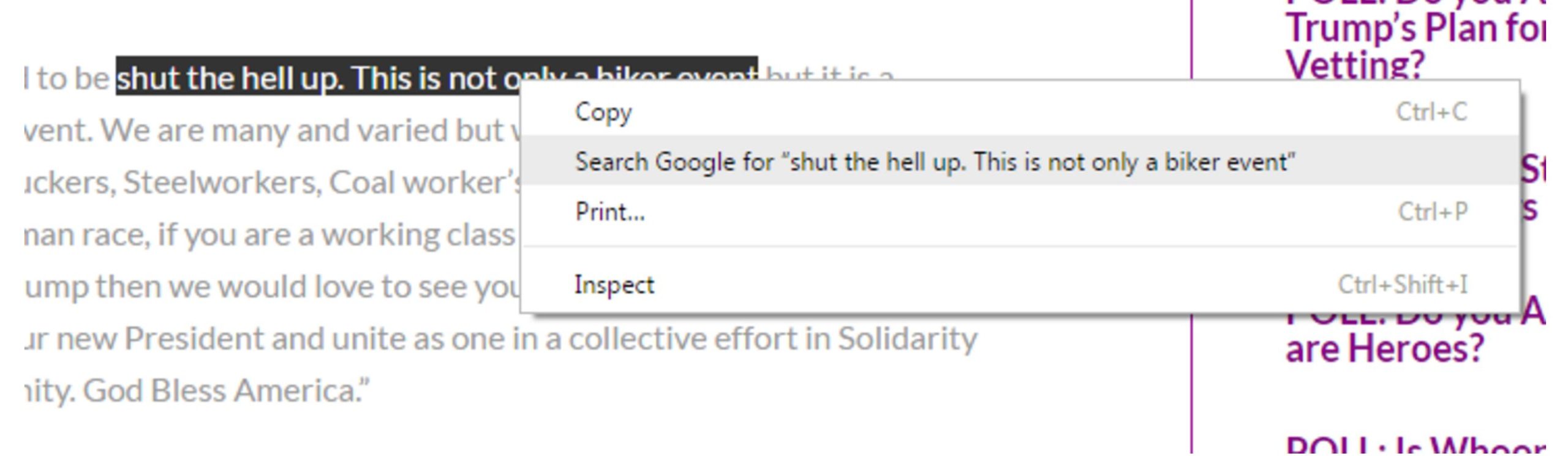

So we just repeat our technique here, and select a bit of text from the quote and right-click/control-click. Our goal is to figure out where this quote came from, and searching on this small but unique piece of it should bring it close to the top of the Google results.

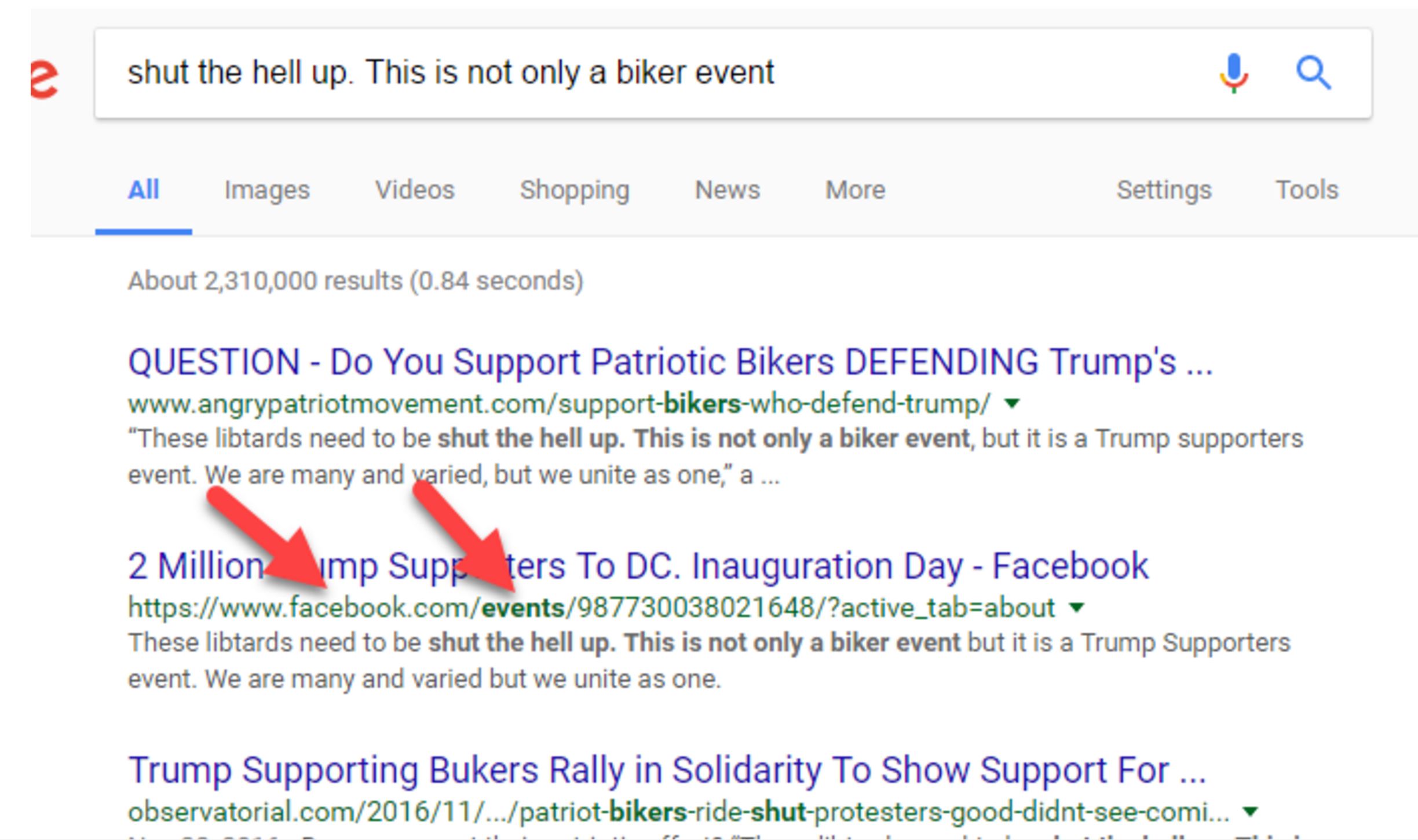

When we search this snippet of the quote, we see that there are dozens of articles covering this story, using the the same quote and sometimes even the same headline. But one of those results is the actual Facebook page for the event, and if we want a sense of how many people are committing, then this is a place to start.

This also introduces us to another helpful practice: when scanning search results, novices scan the titles. Pros scan the URLs beneath the titles, looking for clues as to which sources are best. (Be a pro!)

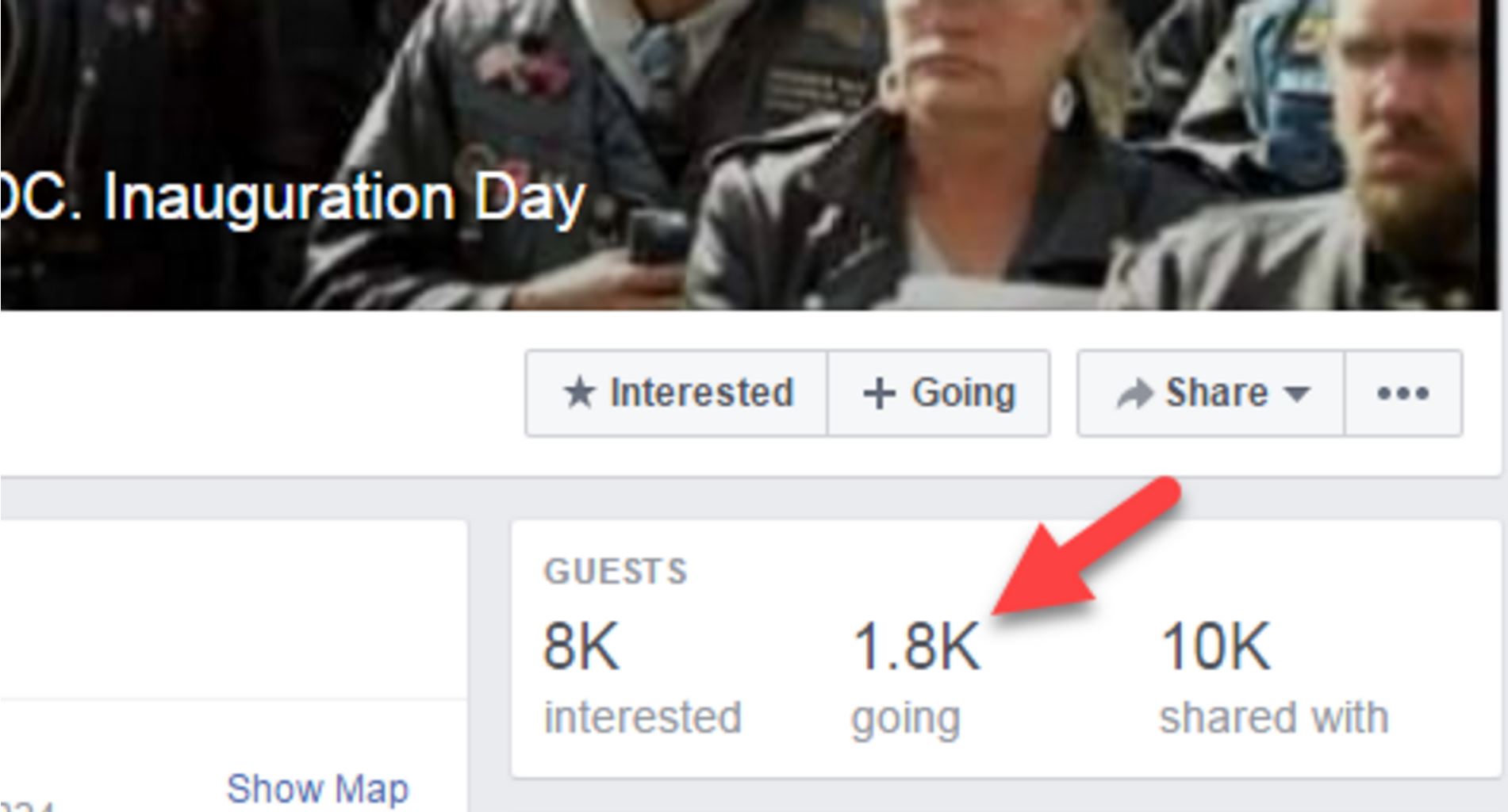

So we go to the “Two Million Biker” Facebook event page, and take a look. How close are they to getting two million bikers to commit to this?

Well…it looks like about 1,800. That’s nothing to sneer at–organizing is hard, and people have lives to attend to. Getting people to give up time for political activity is tough. But it’s pretty short of the “two million bikers” most of these articles were telling us were going to show up.

When we get into how to rate articles on the DigiPo site as true or false, likely or unlikely, we’ll talk a bit about how to write up the evaluation of this claim. Our sense is the rating here is either “Mostly False” or “Unlikely”–there are people planning to go, that’s true, but the importance of the story was based around the scale of attendance, and all indications seem to be that attendance is shaping up to be about a tenth of one percent (0.1%) of what the other articles promised.

Importantly, we would have learned none of this had we decided to evaluate the original page. We learned this by going upstream.

12

Another type of viral content on the internet is photography. It is also some of the most difficult to track upstream to a source. Here’s a picture that showed up in my stream the other day:

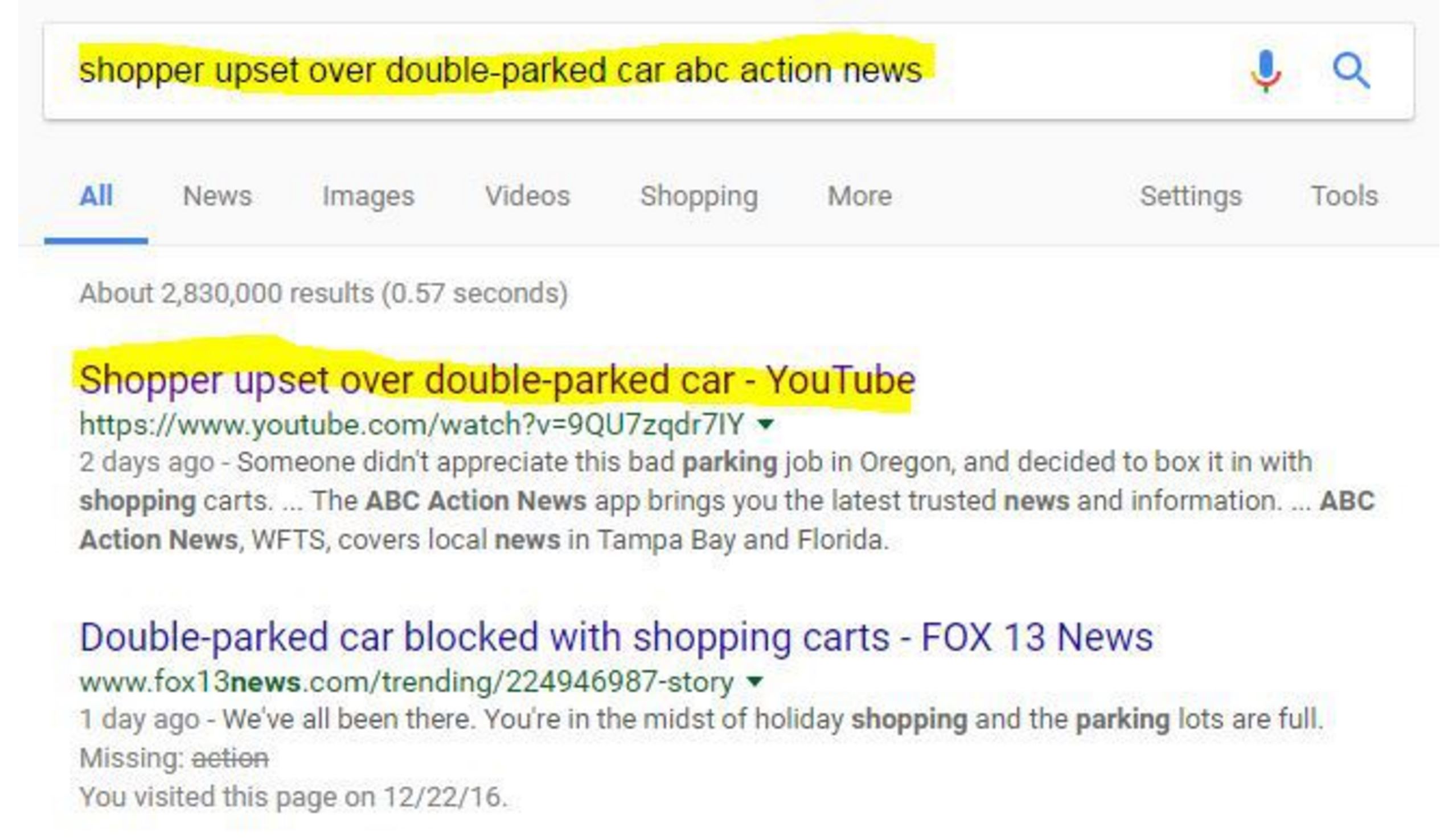

OK, so what’s the story here? To get more information, I pull the textual information off the image and throw it in a Google search:

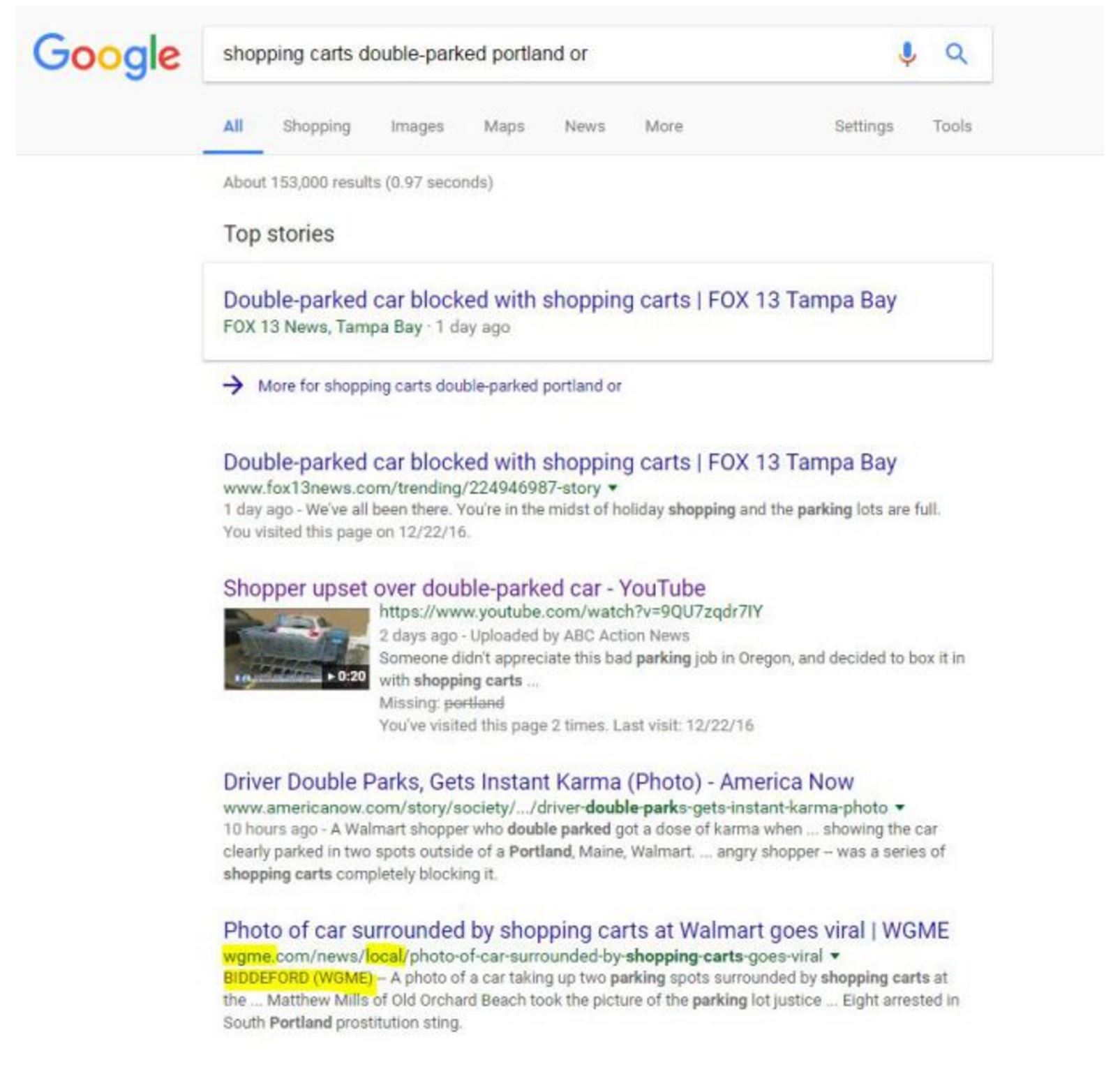

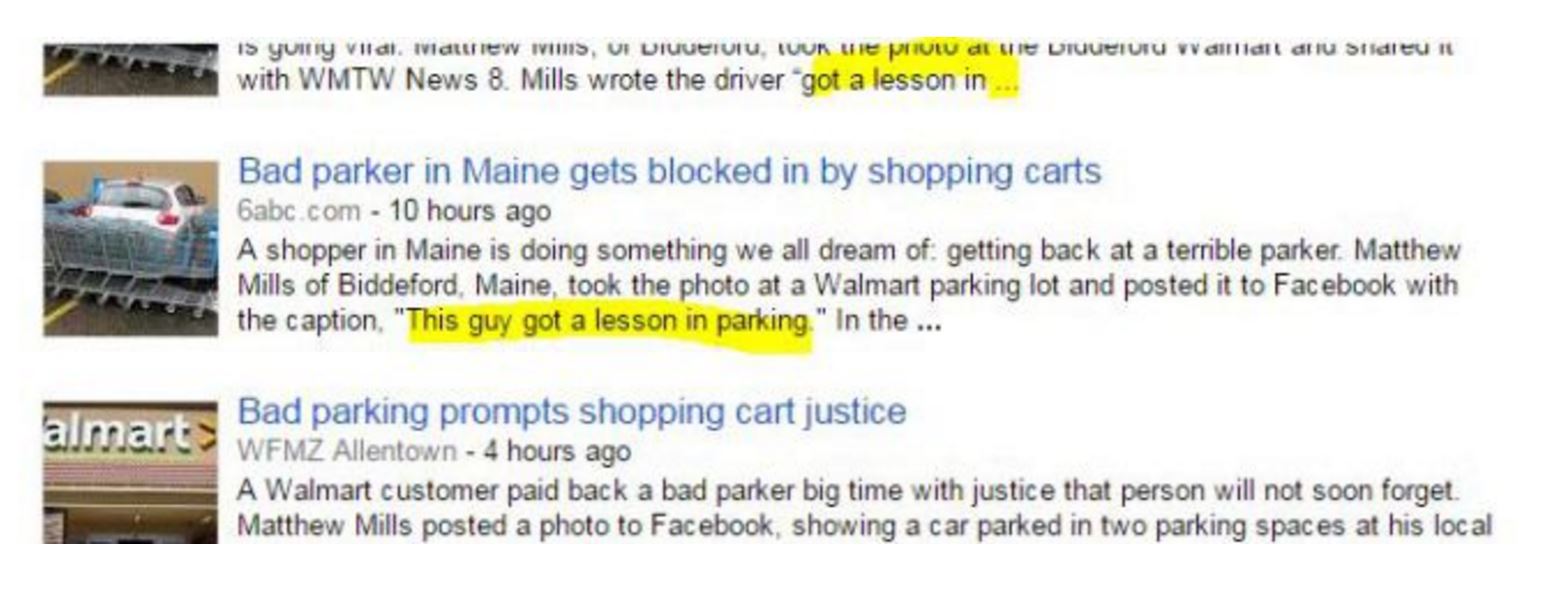

This brings me to a YouTube video that tells me this was taken “outside a Portland, Oregon Walmart” and has been shared “hundreds of times since yesterday.” So now we search with this new information. This next result shows you why you always want to look past the first result:

Which one of these items should I click? Again, the idea here is to get “upstream” to something that is closer to the actual event. One way to do that is to find the earliest post, and we’ll use that in a future task. But another way to get upstream is to get closer to the event in space. Think about it: who is more likely to get the facts of a local story correct, the local newspaper or a random blog?

So as you scan the search results, look at the URLs. Fox 13 News has it in “trending.” AmericaNow has it in the “society” section.

But the WGME link has the story in a “news/local/” directory. This is interesting, because the other site said it happened in Oregon, and here the location is clearly Maine. But this URL pattern is a strong point in the website’s favor.

Further indications here that it might be a good source is that we see in the blurb it mentions the name of the photographer, “Matthew Mills.” The URL plus the specificity of the information tell us this is the way to go.

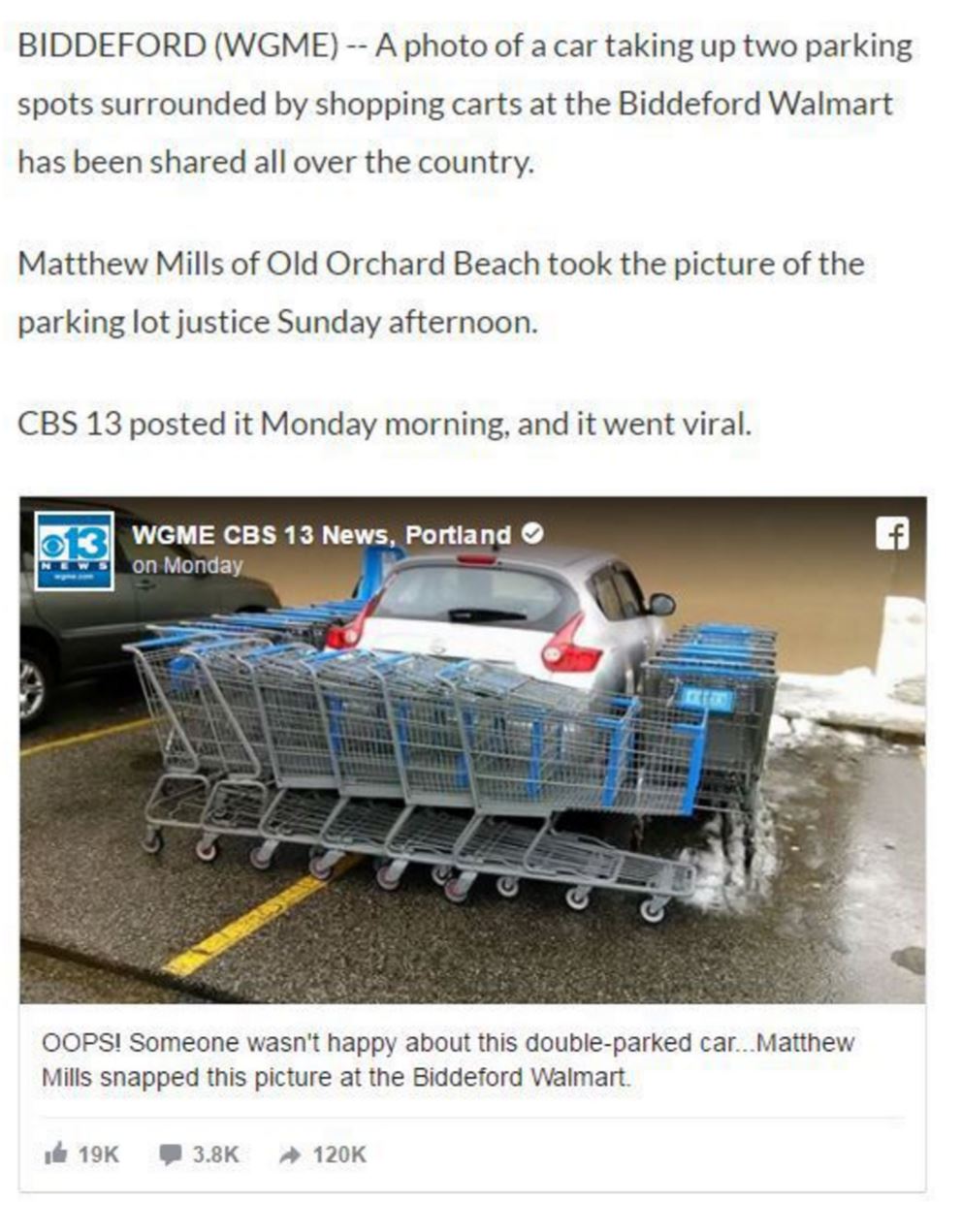

This takes me to what looks like the news page where it went viral, which embeds the original post.

We see here that the downstream news report we found first had a bunch of things wrong. It wasn’t in Portland, Oregon—it was in Biddeford, which is near Portland, Maine. It hasn’t been shared “hundreds of times”–it’s been shared hundreds of thousands of times. And it was made viral by a CBS affiliate, a fact that ABC Action News in Tampa doesn’t mention at all.

OK, let’s go one more step. Let’s look at the Facebook page where Matthew Mills shared it. Part of what we want to see is whether or not this was viral before CBS picked it up. I’d also like to double check that Mills is really from the Biddeford area and see if he was responsible for the shopping carts or just happened upon this scene.

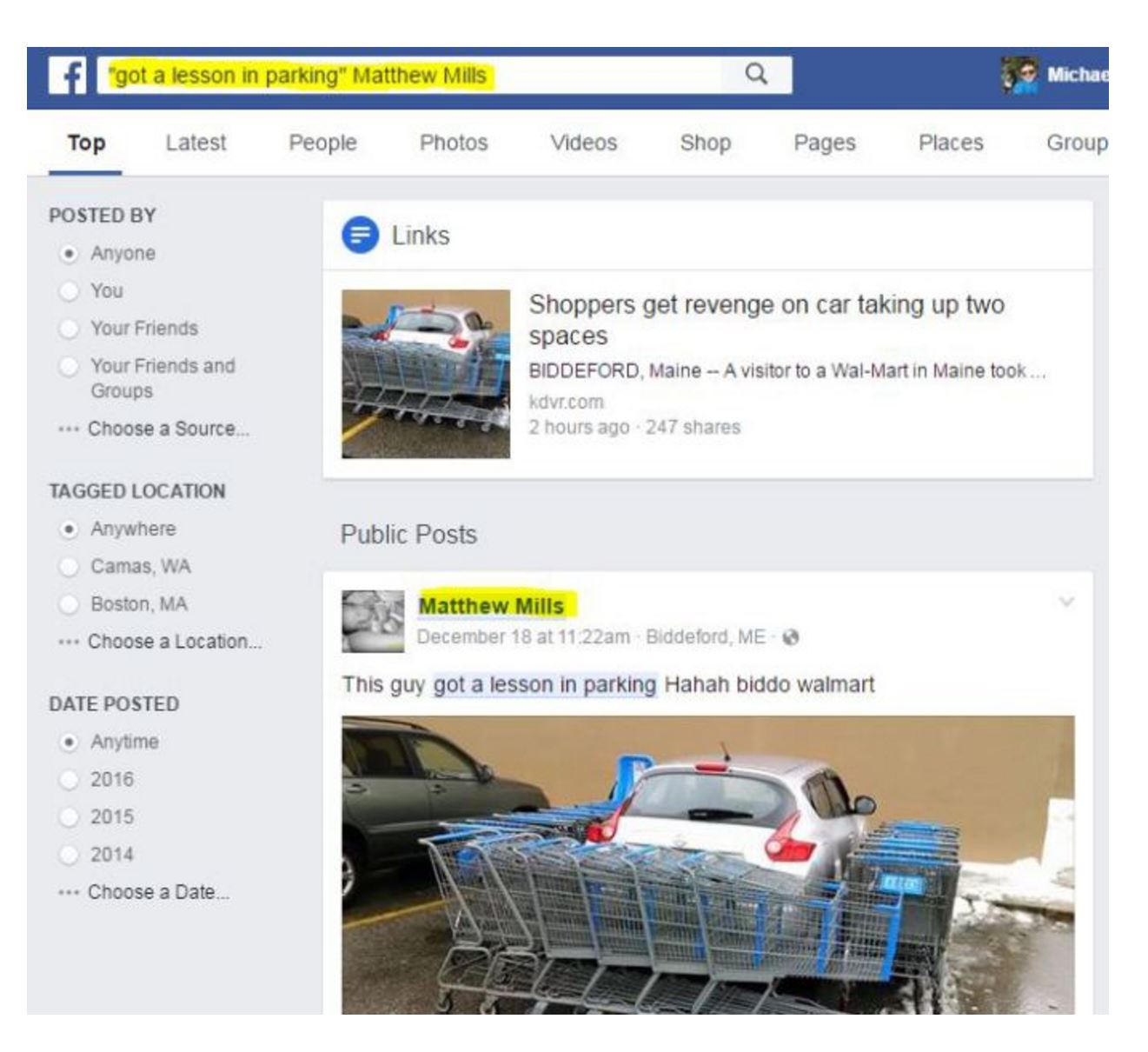

The news post does not link back to the original, so we search on Matthew Mills again. There, we find some news outlets mentioning the original caption by Mills: “This guy got a lesson in parking.”

That’s not the same as the caption that the news station put up–maybe it’s what Mills originally used. We pump “’got a lesson in parking’ Matthew Mills” into Facebook, and bingo, we get the original post:

And here’s where we see something unpleasant about news organizations. They cut other news organizations out of the story, every time. So they say this has been shared hundreds of times because in order to say it has been shared hundreds of thousands of times they’d have to mention it was popularized by a CBS affiliate. So they cut CBS out of the story.

This practice can make it easier to track something down to the source. News organizations work hard to find the original source if it means they can cut other news organizations out of the picture. But it also tends to distort how virality happens. The picture here did not magically become viral—it became viral largely due to the reach of WGME.

Incidentally, we also find answers to other questions in the Matthew Mills version: he took the picture but didn’t arrange the carts, and he really is from Old Orchard Beach.

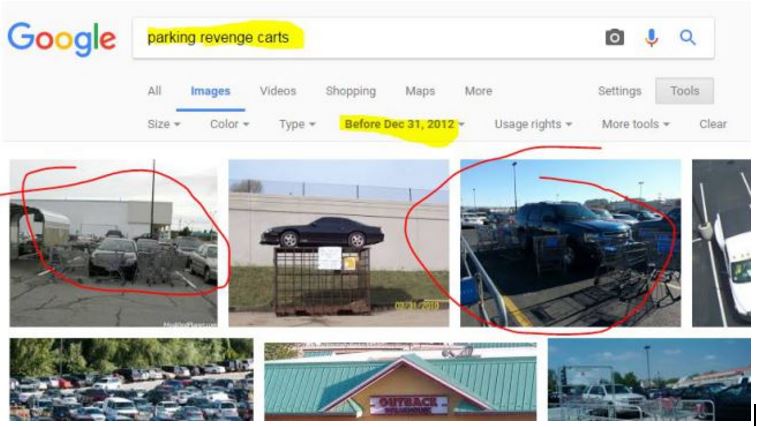

Just because we’re extra suspicious, we throw the image into Google Images to see if maybe this is a recycled image. Sometimes people take old images and pretend the images are theirs–changing only the the supposed date and location. A Google reverse image search (see below) shows that it does not appear to be the case here, although in doing that we find out this is a very common type of viral photo called a “parking revenge” photo. The specific technique of circling carts around a double-parked car dates back to at least 2012:

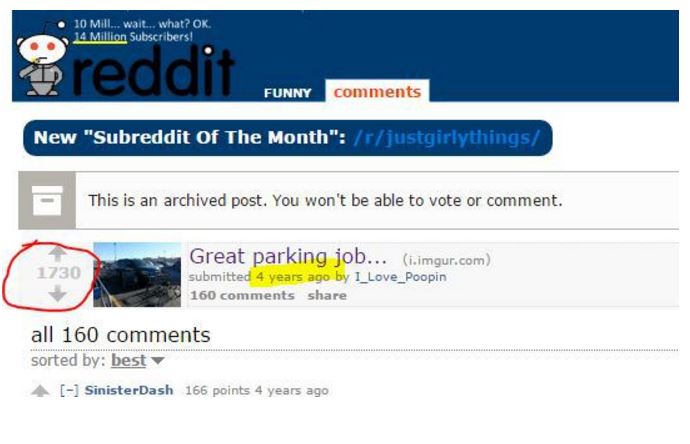

When we click through we can see that the practice was popularized, at least to some extent, by Reddit users. See, for instance, this post from December 2012:

So that’s it. It’s part of a parking revenge meme that dates back at least four years, and was popularized by Reddit. This particular one was shot by Matthew Mills in Biddeford, Maine, who was not the one who circled the carts. And it became viral through the reshare provided by a local Maine news station.

13

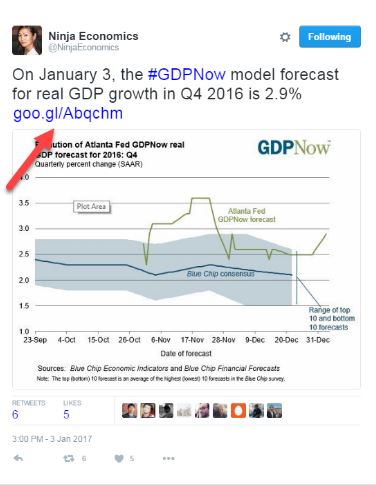

Most of the time finding the origin of an image on Twitter is easy. Just follow the links. For instance, take the chart in this tweet from Twitter user @NinjaEconomics. Should you evaluate it it by figuring out who @NinjaEconomics is?

Nope. Just follow that link to the source. Links are usually the last part of a tweet.

If you do follow that link, the chart is there, with a bunch more information about the data behind it and how it was produced. It’s from the Atlanta Federal Reserve, and it’s the Fed–not @NinjaEconomics–that you want to evaluate.

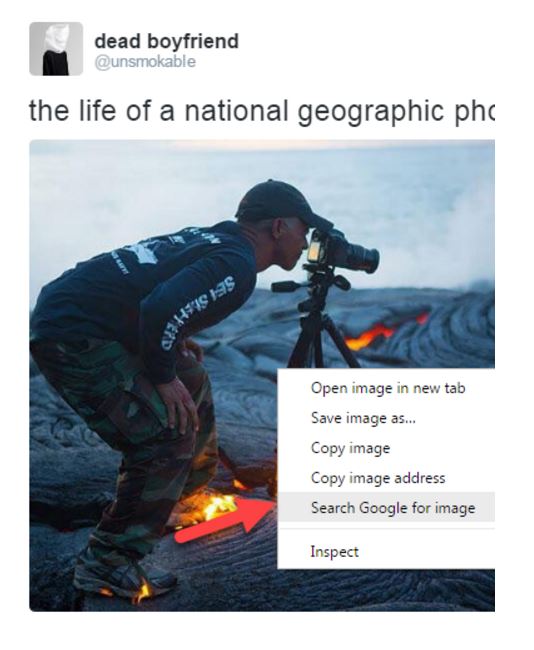

But sometimes people will post a photo that has no source, as this person does here:

So we have questions.

First, is this actually a National Geographic photographer? More importantly, is this real? Is that lava so hot that it will literally set a metal tripod on fire? That seems weird, but we’re not lava experts.

There’s no link here, so we’re going to use reverse image search. If you’re using Google Chrome as a browser, put the cursor over the photo and right-click (control-click on a Mac). A “context menu” will pop up and one of the options will be “Search Google for image.”

(For the sake of narrative simplicity we will show solutions in this text as they would be implemented in Chrome. Classes using this text are advised to use Chrome where possible. The appendix contains notes about translating these tactics to other browsers, and you can of course search the web for the Firefox and Safari corollaries.)

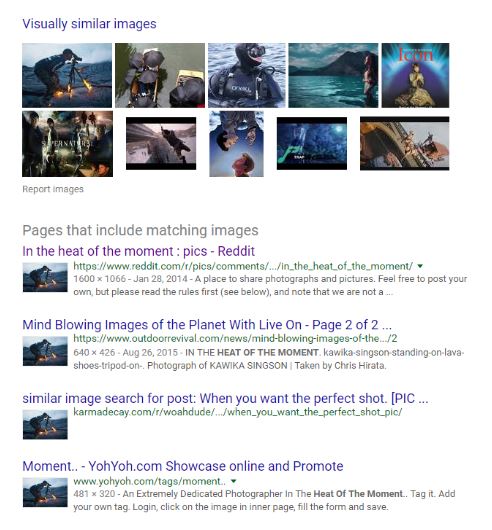

When we reverse search this image we find a bunch of pages that contain the photo, from a variety of sites. One of the sites returned is Reddit. Reddit is a site that is famous for sharing these sorts of photos, but it also has a reputation for having a user base that is very good at spotting fake photos.

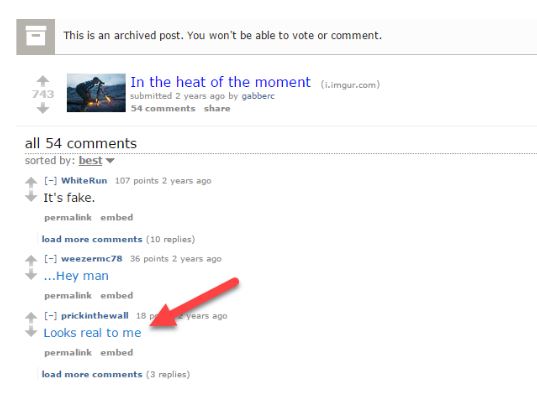

When we go to the Reddit page we find there is an argument there over whether the photo is fake or not. But again, Reddit is not our source here–we need to go further upstream. So we click the link in the Reddit forum that says it’s real and get taken to an article where they actually talk to the photographer:

That brings us to one of the original stories about this photo:

Now we could stop here and just read the headline. But all good fact-checkers know that headlines lie. So we read the article down to the bottom:

For this particular shot, Singson says, “Always trying to be creative, I thought it would be pretty cool (hot!) to take a lava pic with my shoes and tripod on fire while photographing lava.”

This may be a bit pedantic, but I still don’t know if this was staged. Contrary to the headline the photographer doesn’t say lava made his shoes catch on fire. He says he wanted to take a picture of himself with his shoes on fire while standing on lava.

So did his shoes catch on fire, or did he set them on fire? I do notice at the bottom of this page though that this is just a retelling of an article published elsewhere; it’s not this publication who talked to the photographer! It’s a similar situation to what we saw in an earlier chapter, where the Blaze was simply retelling a story that was investigated by the Daily Dot.

In webspeak, “via” means you learned of a story or photo from someone else. In other words, we still haven’t gotten to the source. So we lumber upstream once again, to the PetaPixel site from whence this came. When we go upstream to that site, we find an addendum on the original article:

So a local news outfit has confirmed the photographer did use an accelerant. The photograph was staged. Are we done now?

Not quite. You know what the next step is, right?

Go upstream to Hawaii News Now!

So we do that. We click the link, and we find the quote is good. I like Hawaii News Now for another reason–they are a local news service, so they know a bit about lava fields. That’s probably why they asked the question no one else seemed to ask: “Is that really possible?”

Finally, let’s find out about Hawaii News Now. We start by selecting Hawaii News Now and using our Google search option:

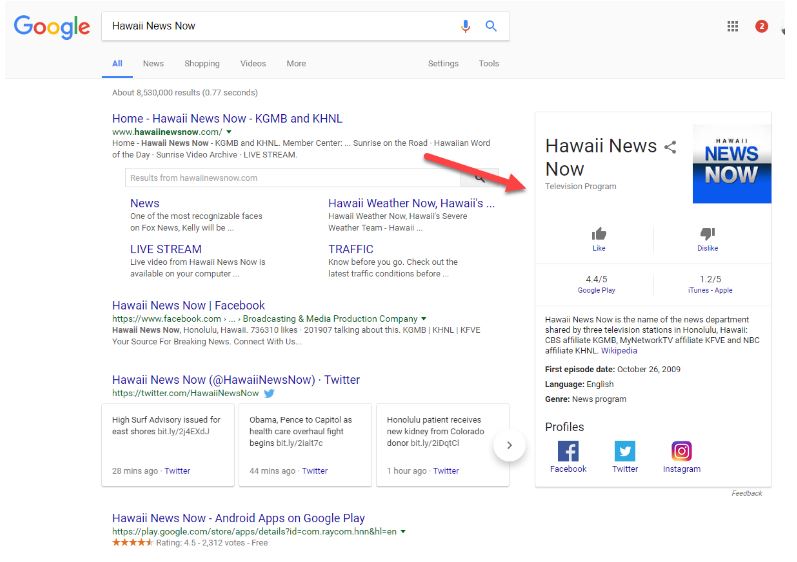

And what we get back is pretty promising: there’s a Google knowledge panel that comes up that tells us it’s bona fide local news program from a CBS affiliate in Hawaii.

Honestly, you could stop there. We’ve solved this riddle. The photographer was really on hot lava, which is impressive in itself, but used some accelerant (such as lighter fluid) to set his shoes and tripod on fire. Additionally, the photo was a stunt, and not part of any naturally occurring National Geographic shoot. We’ve traced the story back to its source, found the answer, and got confirmation on the authoritative nature of the source.

We’re sticklers for making absolutely sure of this, so we’re going to go upstream one more time, and click on the Wikipedia link to the article on the Google knowledge panel to make sure we aren’t missing anything. But I don’t have to make you watch that. I’ll tell you right now it will turn out fine.

In this case at least.

14

As I’ve mentioned above, going upstream is often a journey through time and space. The original story is also the first story, and as we saw with the Hawaiian news site, local sources often have special insights into stories.

There are specific tactics you can use with Google and other search engines to help you find original material more quickly.

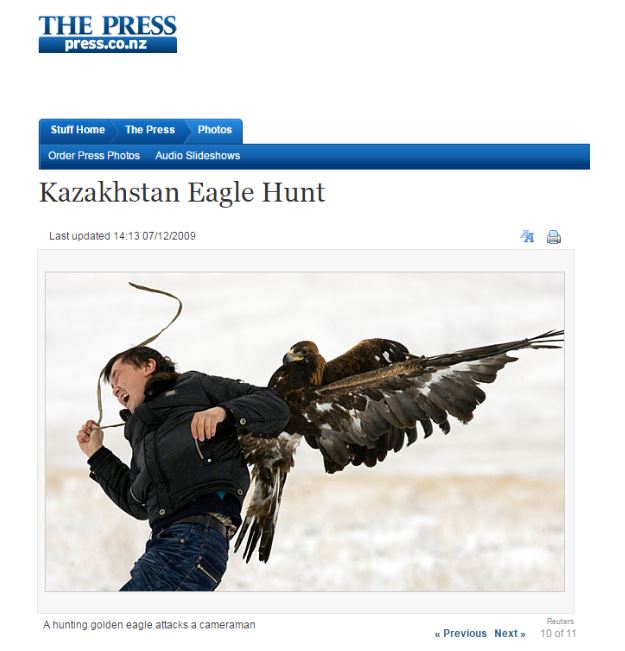

The following photo is another photo that Twitter users have identified as another “National Geographic photographer” photo. Is it?

A Google reverse image search finds the photo, suggesting the best search term is “birds attacking people.”

This suggestion is based on the fact that the pages where this photo shows up often contain these words: “birds attacking people.”

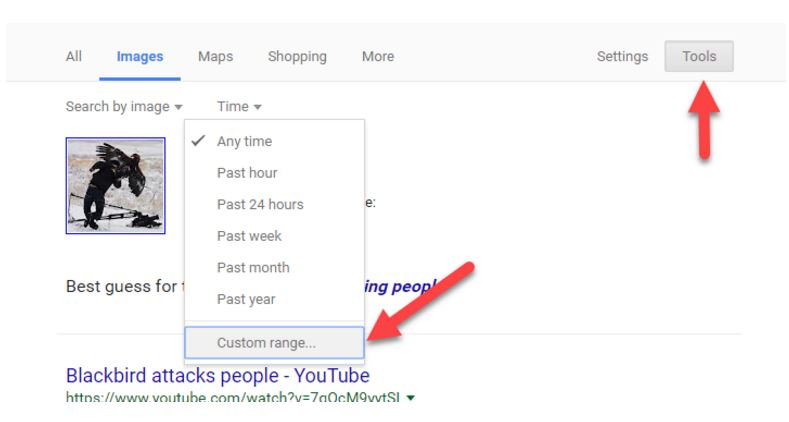

We can modify that search, however. Let’s return only the older pictures.

We do that by clicking the “Tools” button and then using the “Time” dropdown to select “Custom range.” This should filter out some of the posts that merely include this in slideshows.

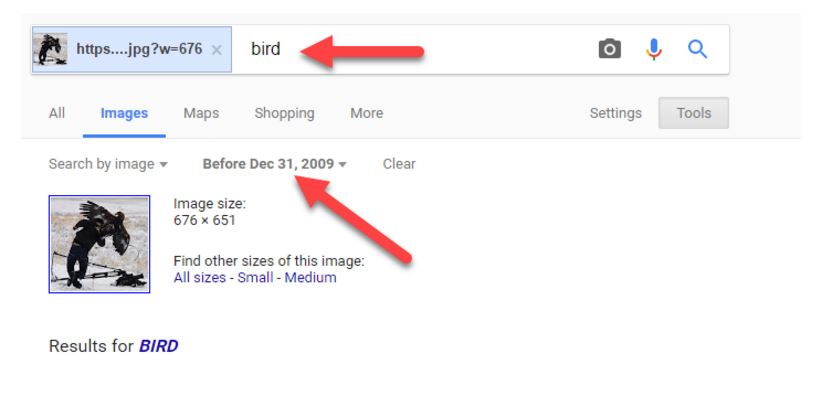

We pick a date in the past to see if we can filter out the newer photos. We remove the “birds attacking people” search and replace it with “bird,” since the other phrase sounds like a title for a slideshow with many of these sorts of photos in it. The original isn’t likely to be on a page like that; the slideshows come later in the viral cycle:

Why 2009? For viral photos I usually find 2009 or 2010 a good starting point. If you don’t find any results within that parameter, then go higher, to a year like 2012. If you find too many results, then change the search to something like 2007.

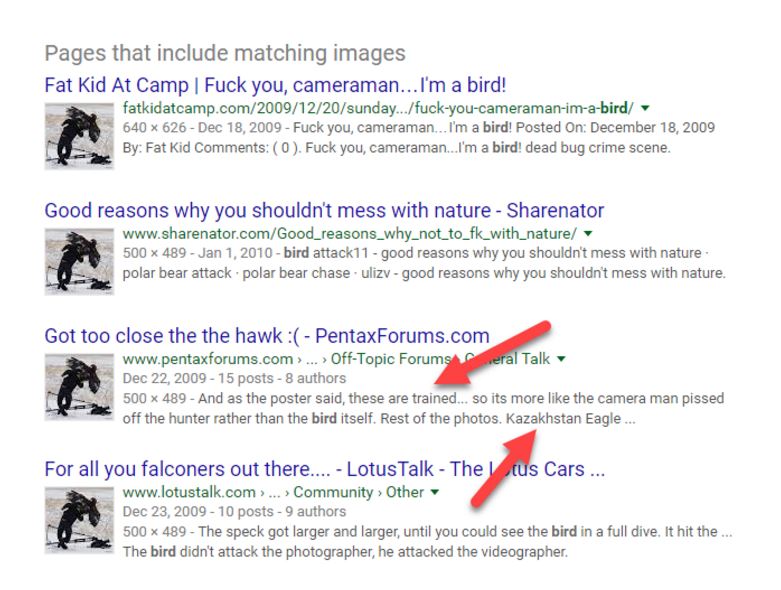

Here we get a much better set of results. Instead of a list of “When Birds Attack” slideshows, we get a set of results talking about this specific photo. One of the results stands out to me.

This third result looks most promising for two reasons:

Luckily when we go to that page it links us in the comments to a page that has the set of shots that the photographer was taking, as well as a shot of this cameraman being attacked from another angle.

It’s a series of photos from a hunting competition in Chengelsy Gorge, Kazakhstan. The eagle attacking him is tame and trained, but for some reason attacked him anyway. So this is real; it’s not photoshopped or staged. At the same time it’s not a National Geographic photographer. We could pursue it further if we wanted, but we’ll stop here.

While this process takes some time to explain, in practice it can be done in about 90 seconds. Here’s a YouTube video that shows what this looks like in practice.

(Note that as long as you are careful with confirmation bias, you can replace the search term “bird” with a term like “fake” to find pages claiming the image is fake and see what evidence they present.)

Going local is also useful for other sorts of events. Here is text from a story that ran in many right-wing blogs, under headlines such as “Teen Girls Savagely Beaten By Black Lives Matter Thugs”:

Two white teenage girls and their mother were attacked during the protests in Stockton last Friday. The young girls were transported to the hospital by police after being viciously beaten by Black Lives Matter supporters, but one of the attackers will soon face criminal charges for his role in the assault.

The two teenage girls said they were viciously attacked by more than a dozen male and female protesters as they were leaving a restaurant. As they were leaving the restaurant, they were approached by a group of protesters chanting “Black Lives Matter.”

The headlines and the language used in those posts were often inflammatory and racist, but is there really a story under this? Or is the story fake?

There are many ways we can investigate the story, but for a local event like this you would expect some local coverage. So to go upstream here, one option is to go local. In this case we look to see what news organizations cover the area, by typing in “stockton ca local affiliate”:

Then we go to one of those sites and look for the news, typing in “teenage girls black lives matter.”

And in doing that we find that the event did happen. But the facts, if you follow that link, are more complex than most of the tertiary coverage will convey.

There’s plenty to argue about concerning the event. But by going to the local source we can start with a cleaner version of the facts. This isn’t to say that local news is always reliable, but in a sea of spin and fakery, it’s not a bad place to start for coverage and confirmation of local events.

15

These two photos have been attributed to National Geographic shoots by the same tweeter I mentioned above.

I put the photos below. If you are reading this on the web, go to it. If you are reading this book in PDF form, you’ll have to go find them at the Hapgood blog to use your Google reverse image Search right-click/control-click action.

The first one is easy. Is this real, or fake? And are these National Geographic photographers or not? Is the bear real?

This second one is a lot harder. But is this real or fake? If real, can you find the name of the photographer in the swan and his nationality? If fake, can you show a debunking of it?

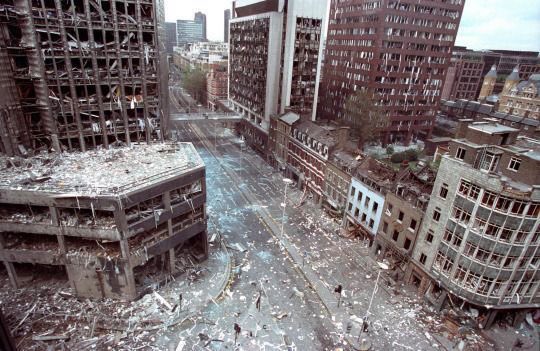

This next one is political. It was shared by a Twitter user who claimed it was a picture of an Irish Republican Army bombing. To paraphrase the poster: “This is London in 1993 after an IRA truck bomb. We didn’t ban Irish people or Catholics.” The poster making a comparison to recent moves to ban travel from Muslim countries in the U.S.

Is this a picture of a 1993 London truck bombing? If so, how many people died and/or were injured? What was the response?

IV

16

Time for our third move: good fact-checkers read “laterally,” across many connected sites instead of digging deep into the site at hand.

When you start to read a book, a journal article, or a physical newspaper in the “real world,” you already know quite a bit about your source. You’ve subscribed to the newspaper, or picked it up from a newsstand because you’ve heard of it. You’ve ordered the book from Amazon or purchased it from a local bookstore because it was a book you were interested in reading. You’ve chosen a journal article either because of the quality of the journal article or because someone whose expertise and background you know cited it. In other words, when you get to the document you need to evaluate, the process of getting there has already given you some initial bearings.

Compared to these intellectual journeys, web reading is a bit more like teleportation. Even after following a source upstream, you arrive at a page, site, and author that are often all unknown to you. How do you analyze the author’s qualifications or the trustworthiness of the site?

Researchers have found that most people go about this the wrong way. When confronted with a new site, they poke around the site and try to find out what the site says about itself by going to the “about page,” clicking around in onsite author biographies, or scrolling up and down the page. This is a faulty strategy for two reasons. First, if the site is untrustworthy, then what the site says about itself is most likely untrustworthy, as well. And, even if the site is generally trustworthy, it is inclined to paint the most favorable picture of its expertise and credibility possible.

The solution to this is, in the words of Sam Wineburg’s Stanford research team, to “read laterally.” Lateral readers don’t spend time on the page or site until they’ve first gotten their bearings by looking at what other sites and resources say about the source at which they are looking.

For example, when presented with a new site that needs to be evaluated, professional fact-checkers don’t spend much time on the site itself. Instead they get off the page and see what other authoritative sources have said about the site. They open up many tabs in their browser, piecing together different bits of information from across the web to get a better picture of the site they’re investigating. Many of the questions they ask are the same as the vertical readers scrolling up and down the pages of the source they are evaluating. But unlike those readers, they realize that the truth is more likely to be found in the network of links to (and commentaries about) the site than in the site itself.

Only when they’ve gotten their bearings from the rest of the network do they re-engage with the content. Lateral readers gain a better understanding as to whether to trust the facts and analysis presented to them.

You can tell lateral readers at work: they have multiple tabs open and they perform web searches on the author of the piece and the ownership of the site. They also look at pages linking to the site, not just pages coming from it.

Lateral reading helps the reader understand both the perspective from which the site’s analyses come and if the site has an editorial process or expert reputation that would allow one to accept the truth of a site’s facts.

We’re going to deal with the latter issue of factual reliability, while noting that lateral reading is just as important for the first issue.

17

Authority and reliability are tricky to evaluate. Whether we admit it or not, most of us would like to ascribe authority to sites and authors who support our conclusions and deny authority to publications that disagree with our worldview. To us, this seems natural: the trustworthy publications are the ones saying things that are correct, and we define “correct” as what we believe to be true. A moment’s reflection will show the flaw in this way of thinking.

How do we get beyond our own myopia here? For the Digital Polarization Project for which this text was created, we ended up adopting Wikipedia’s guidelines for determining the reliability of publications. These guidelines were developed to help people with diametrically opposed positions argue in rational ways about the reliability of sources using common criteria.

For Wikipedians, reliable sources are defined by process, aim, and expertise. I think these criteria are worth thinking about as you fact-check.

Above all, a reliable source for facts should have a process in place for encouraging accuracy, verifying facts, and correcting mistakes. Note that reputation and process might be apart from issues of bias: the New York Times is thought by many to have a center-left bias, the Wall Street Journal a center-right bias, and USA Today a centrist bias. Yet fact-checkers of all political stripes are happy to be able to track a fact down to one of these publications since they have reputations for a high degree of accuracy, and issue corrections when they get facts wrong.

The same thing applies to peer-reviewed publications. While there is much debate about the inherent flaws of peer review, peer review does get many eyes on data and results. Their process helps to keep many obviously flawed results out of publication. If a peer-reviewed journal has a large following of experts, that provides even more eyes on the article, and more chances to spot flaws. Since one’s reputation for research is on the line in front of one’s peers, it also provides incentives to be precise in claims and careful in analysis in a way that other forms of communication might not.

According to Wikipedians, researchers and certain classes of professionals have expertise, and their usefulness is defined by that expertise. For example, we would expect a marine biologist to have a more informed opinion about the impact of global warming on marine life than the average person, particularly if they have done research in that area. Professional knowledge matters too: we’d expect a health inspector to have a reasonably good knowledge of health code violations, even if they are not a scholar of the area. And while we often think researchers are more knowledgeable than professionals, this is not always the case. For a range of issues, professionals in a given area might have better insight than researchers, especially where question deal with common practice.

Reporters, on the other hand, often have no domain expertise, but may write for papers that accurately summarize and convey the views of experts, professionals, and event participants. As reporters write in a niche area over many years (e.g. opioid drug policy) they may acquire expertise themselves.

Aim is defined by what the publication, author, or media source is attempting to accomplish. Aims are complex. Respected scientific journals, for example, aim for prestige within the scientific community, but must also have a business model. A site like the New York Times relies on ad revenue but is also dependent on maintaining a reputation for accuracy.

One way to think about aim is to ask what incentives an article or author has to get things right. An opinion column that gets a fact or two wrong won’t cause its author much trouble, whereas an article in a newspaper that gets facts wrong may damage the reputation of the reporter. On the far ends of the spectrum, a single bad or retracted article by a scientist can ruin a career, whereas an advocacy blog site can twist facts daily with no consequences.

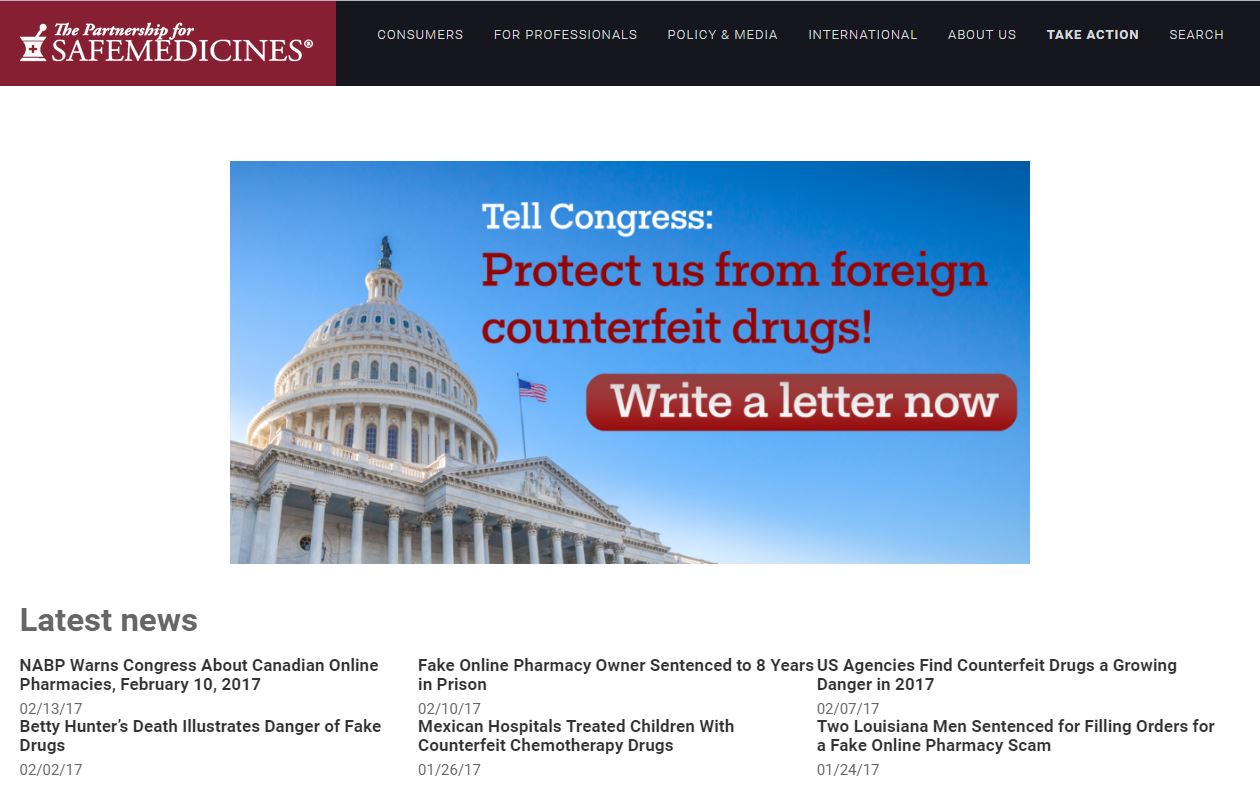

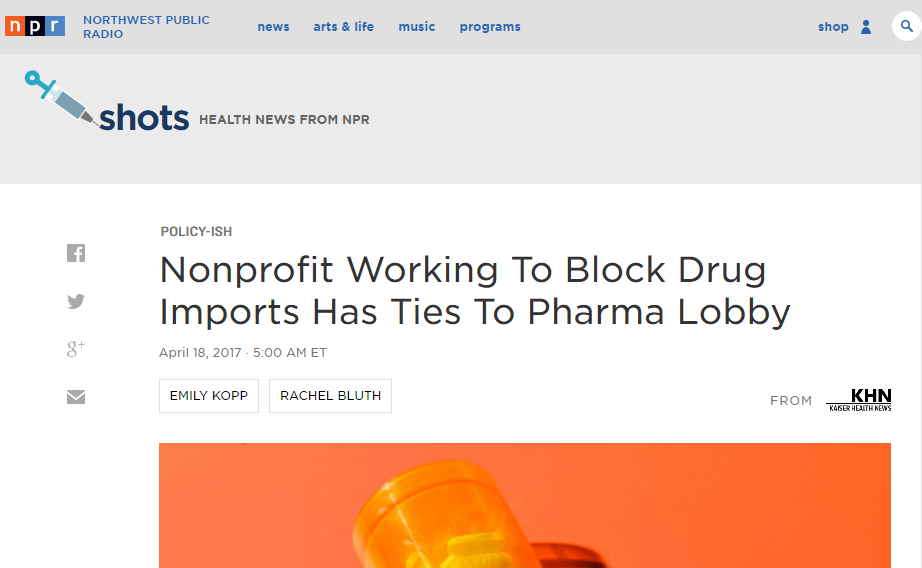

Policy think tanks, such as the Cato Institute and the Center for American Progress, are interesting hybrid cases. To maintain their funding, they must continue to promote aims that have a particular bias. At the same time, their prestige (at least for the better known ones) depends on them promoting these aims while maintaining some level of honesty.

In general, you want to choose a publication that has strong incentives to get things right, as shown by both authorial intent and business model, reputational incentives, and history.

18

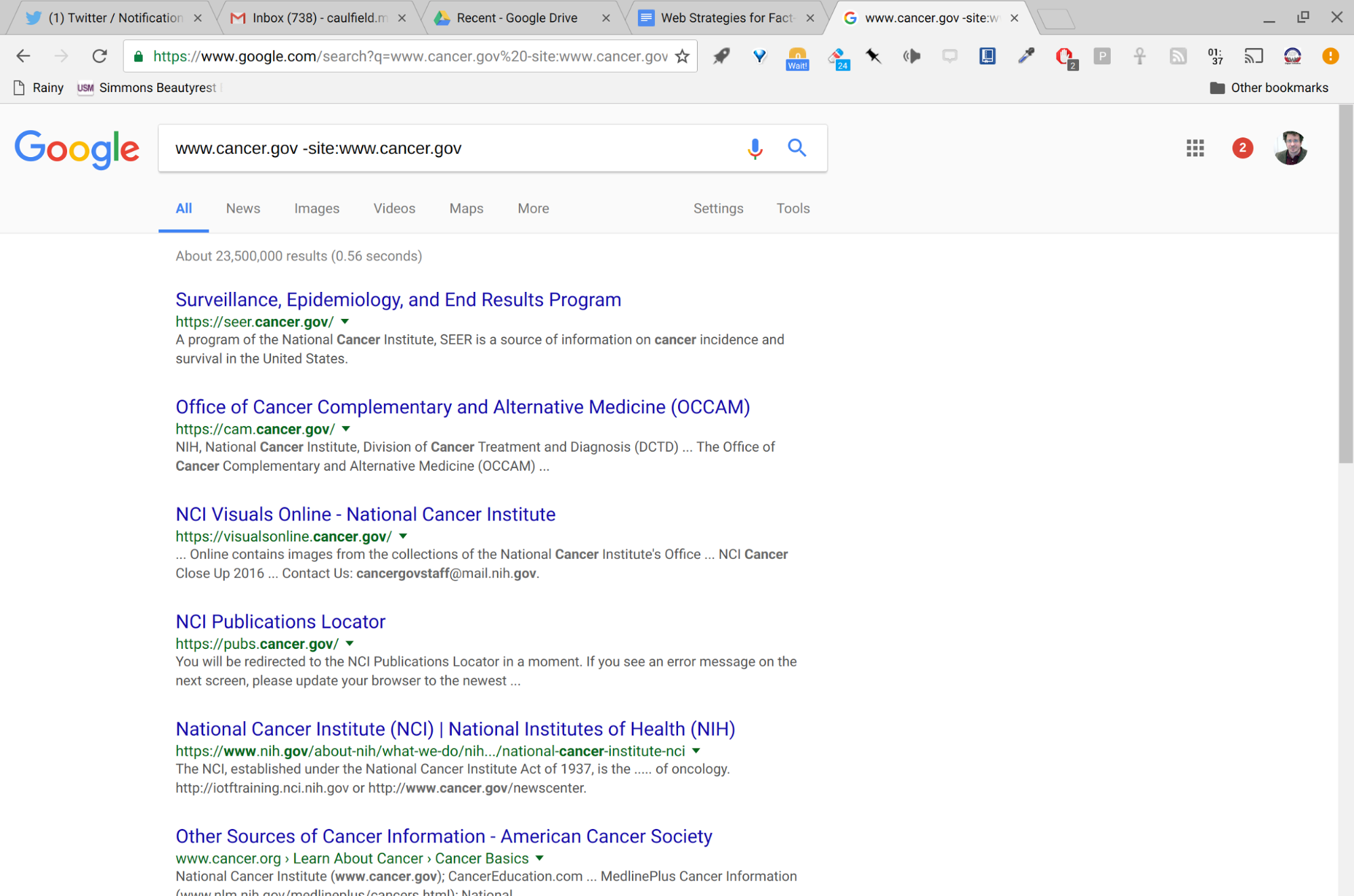

What are some quick techniques to identify an unfamiliar site’s worldview, process, aims, and expertise?

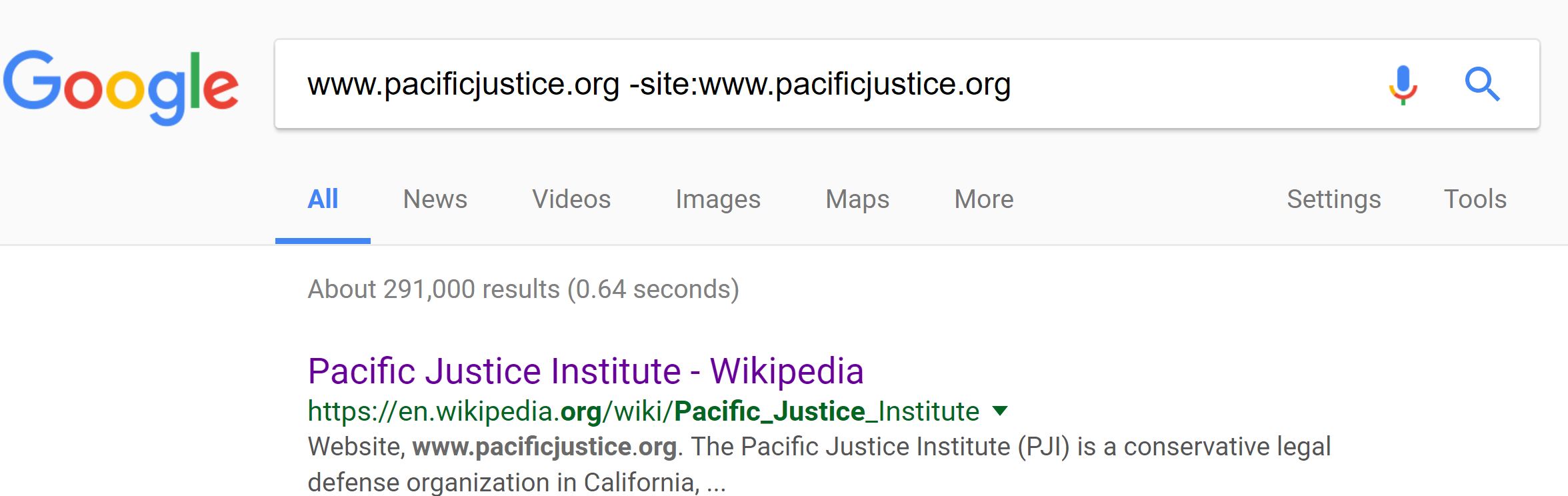

The simplest and quickest way to get a sense of where a site sits in the network ecosystem is to Google search the site. Since we want to find out what other sites are saying about the site while excluding what the site says about itself, we use a special search syntax that excludes pages from the target site.

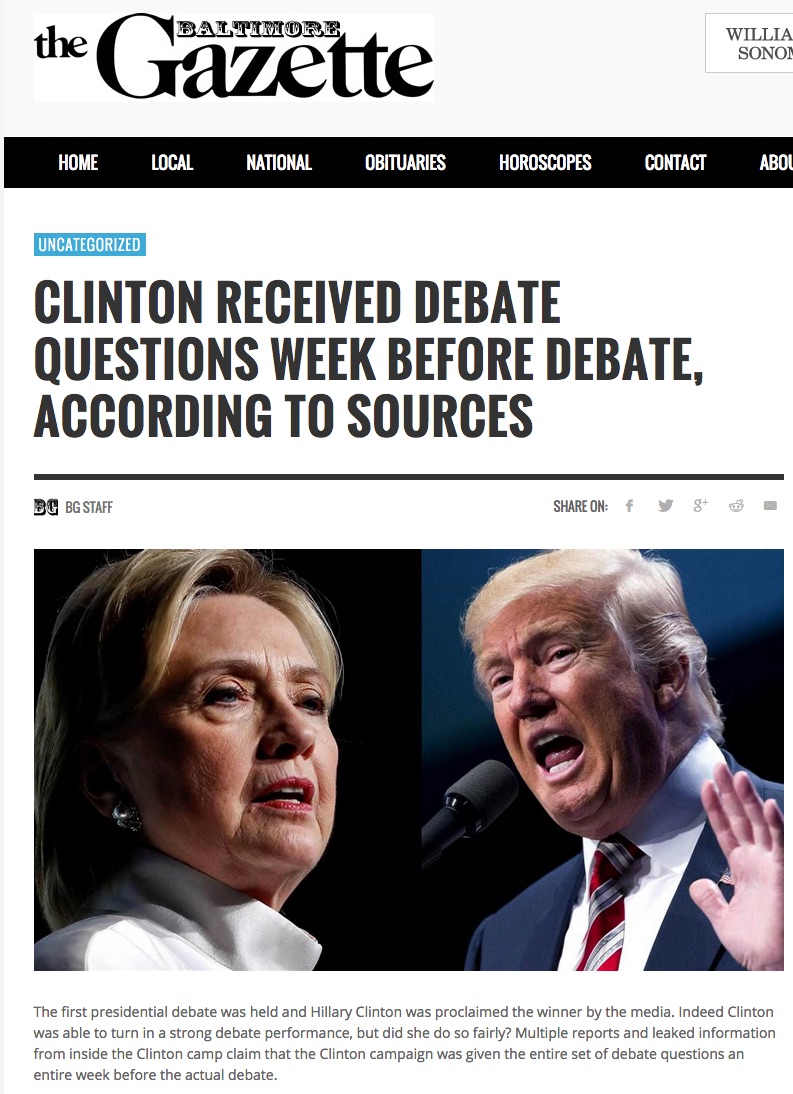

For example, say we are looking at an article in the Baltimore Gazette:

Is this a reputable newspaper?

The site is down right now, but when it was up, a search for “baltimoregazette.com” would have returned many pages, mostly from the site itself. As noted earlier, if we don’t know whether to trust a site, it doesn’t make much sense to trust the story the site tells us about itself.

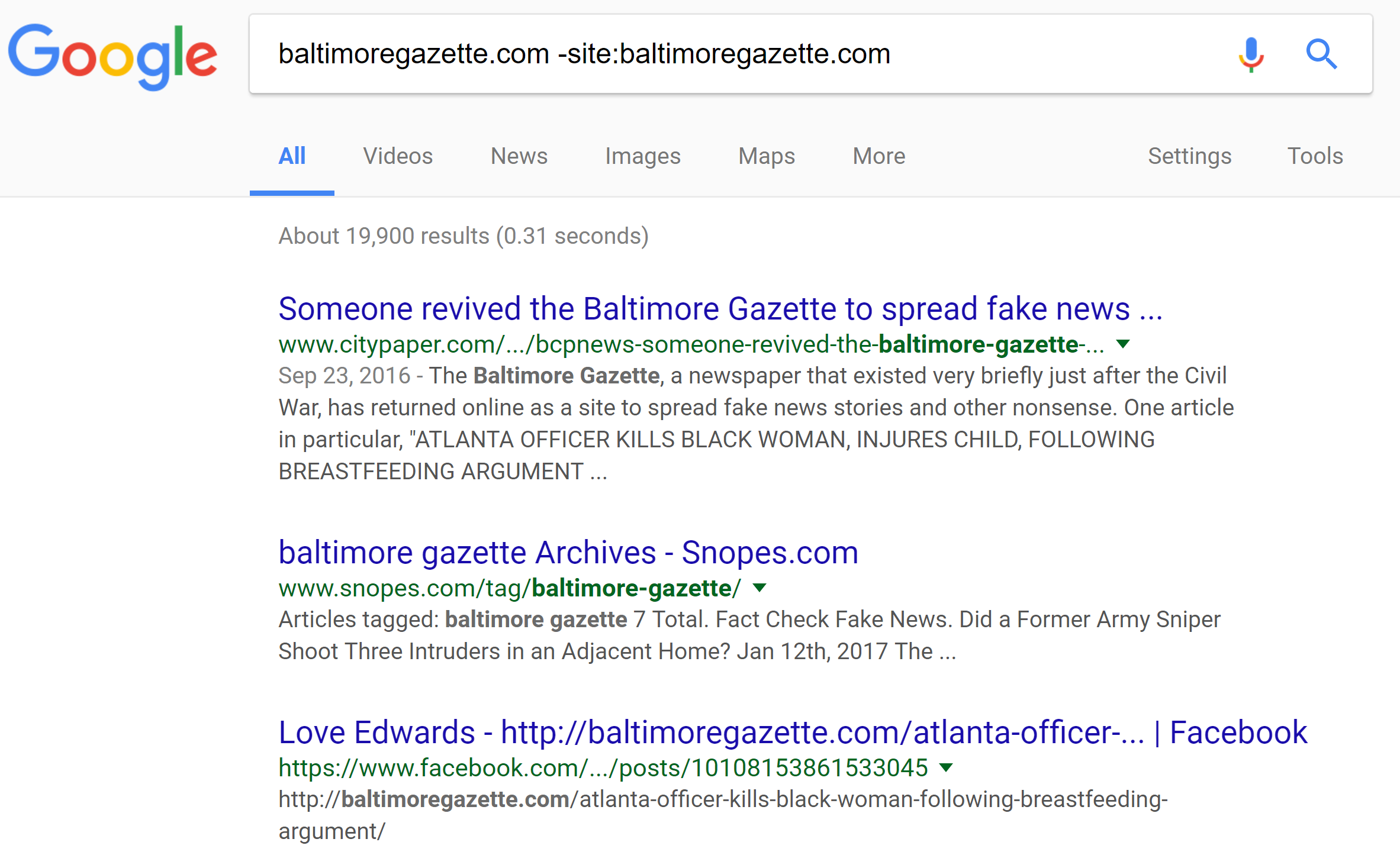

So we use a search syntax that looks for all references to the site that are not on the site itself:

baltimoregazette.com -site:baltimoregazette.com

When we do that we get a set of results that we can scan, looking for sites we trust:

These results, as we scan them, give us reason to suspect the site. Maybe we don’t know “City Paper,” which claims the site is fake. But we do know Snopes. When we take a look there, we find the following sentence about the Gazette:

On 21 September 2016, the Baltimore Gazette — a purveyor of fake news, not a real news outlet — published an article reporting that any “rioters” caught looting in Charlotte would permanently lose food stamps and all other government benefits…

From Snopes, that’s pretty definitive. This is a fake news site.

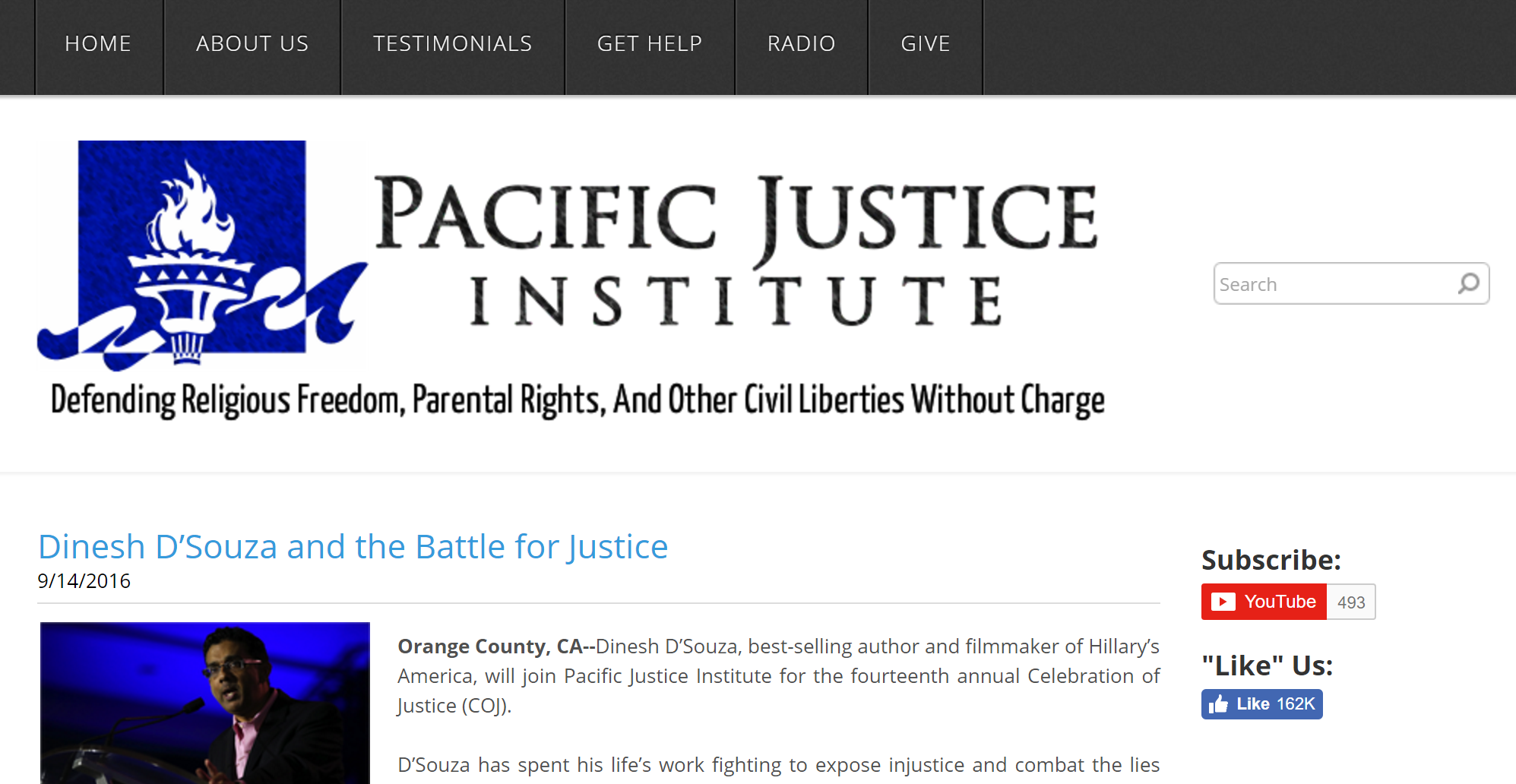

Searches like this don’t always turn up Snopes or Politifact. Here’s the site of the Pacific Justice Institute:

Here, a search of Google turns up a Wikipedia article:

That article explains that this is a conservative legal defense fund that has been named a hate site by the Southern Poverty Law Center.

Maybe to you that means that nothing from this site is trustworthy; maybe to another person it simply means proceed with caution. But after a short search and two clicks, you can begin reading an article from this site with a better idea of the purpose behind it, a key ingredient of intentional reading.

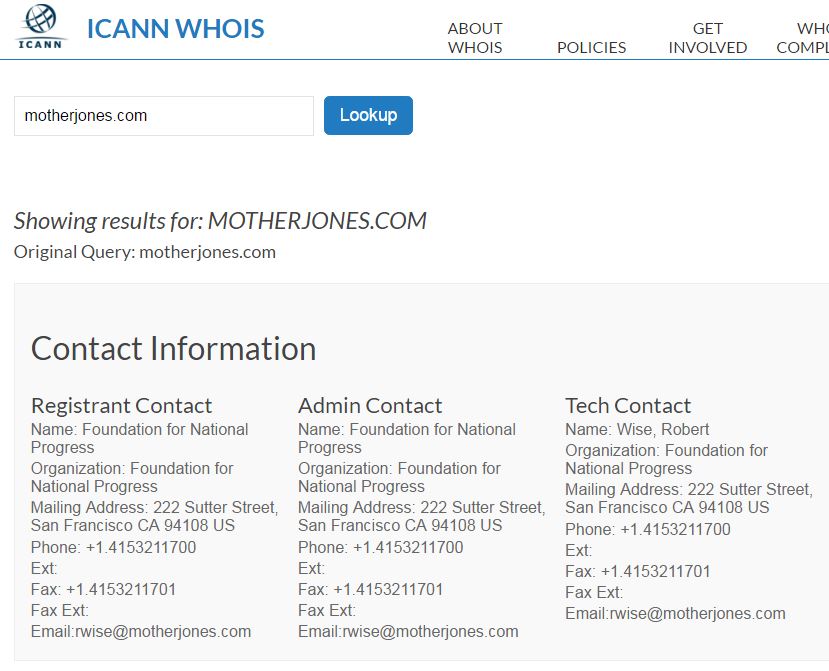

Some smaller sites don’t have reliable commentary around them. For these sites, using WHOIS to find who owns them may be a useful move.

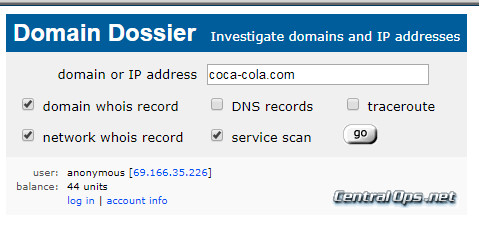

WHOIS gets you information about who is the administrator of the site domain. It can be done from your computer’s command line in many cases, but here we’ll show the ICANN interface, where we are searching to see who owns Mother Jones, an online news site:

When we search on the owner, we find that:

The Foundation for National Progress is a nonprofit organization created to educate the American public by publishing Mother Jones. Mother Jones is a multiplatform news organization that conducts in-depth investigative reporting and high quality, original, explanatory journalism on major social issues, including money in politics, gun violence, economic inequality and the future of work.

(We could have found this out by other means as well, of course).

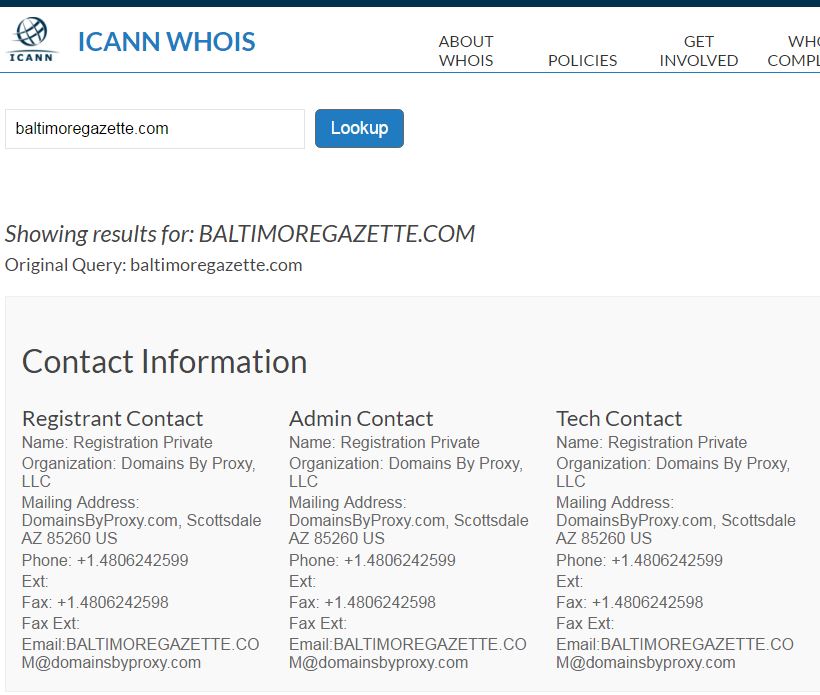

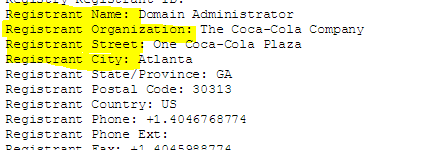

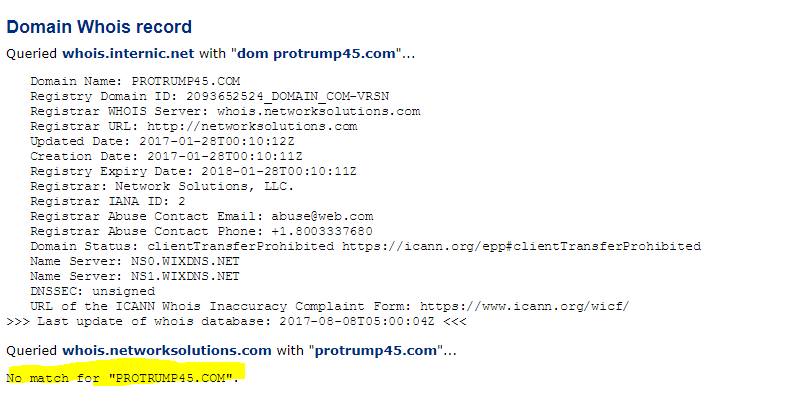

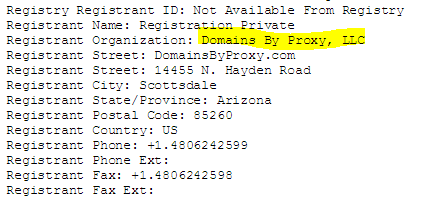

Unfortunately, WHOIS blockers have dramatically reduced the value of WHOIS searches. The famous Baltimore Gazette fake news site from 2016, for example, uses a proxy service to hide revealing information.

The owner of the site here isn’t Domains by Proxy, as the record indicates. Instead, Domains by Proxy is a service, often available for a couple dollars a year, that obscures the true ownership of the site. These masking services are starting to become the norm, dramatically reducing the usefulness of WHOIS searches.

That said, there is still useful information to be had here, particularly in the date the baltimoregazette.com domain was registered, which is listed here as being in mid-2015:

If this were an established local paper, it would be fairly odd for it to have first registered the site a year ago.

19

Evaluate the reputations of the following sites by “reading laterally.” Answer the following questions to determine the reputability of each site: Who runs them? To what purpose? What is their history of accuracy, and how do they rate on process, aim, and expertise?

20

There’s no more dreaded phrase to the fact-checker than “a recent study says.” Recent studies say that chocolate cures cancer, prevents cancer, and may have no impact on cancer whatsoever. Recent studies say that holding a pencil in your teeth makes you happier. Recent studies say that the scientific process is failing, and others say it is just fine.

Most studies are data points–emerging evidence that lends weight to one conclusion or another but does not resolve questions definitively. What we want as a fact-checker is not data points, but the broad consensus of experts. And the broad consensus of experts is rare.

The following chapters are not meant to show you how to meticulously evaluate research claims. Instead, they are meant to give you, the reader, some quick and frugal ways to decide what sorts of research can be safely passed over when you are looking for a reliable source. We take as our premise that information is abundant and time is scarce. As such, it’s better to err on the side of moving onto the next article than to invest time in an article that displays warning signs regarding either expertise or accuracy.

21

I mentioned earlier that this process is one of elimination. In a world where information is plentiful, we can be a bit demanding about what counts as evidence. When it comes to research, one gating expectation can be that published academic research cited for a claim comes from respected peer-reviewed journals.

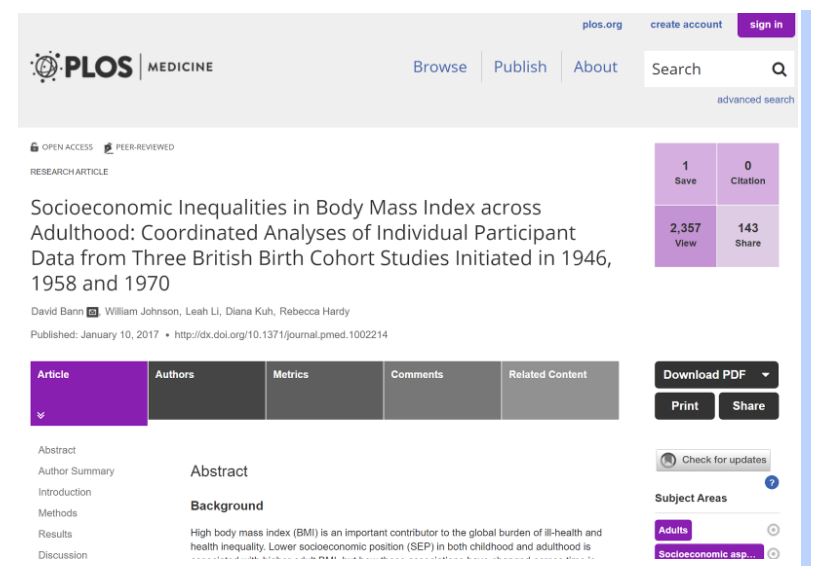

Consider this journal:

Is it a journal that gives any authority to this article? Or is it just another web-based paper mill?

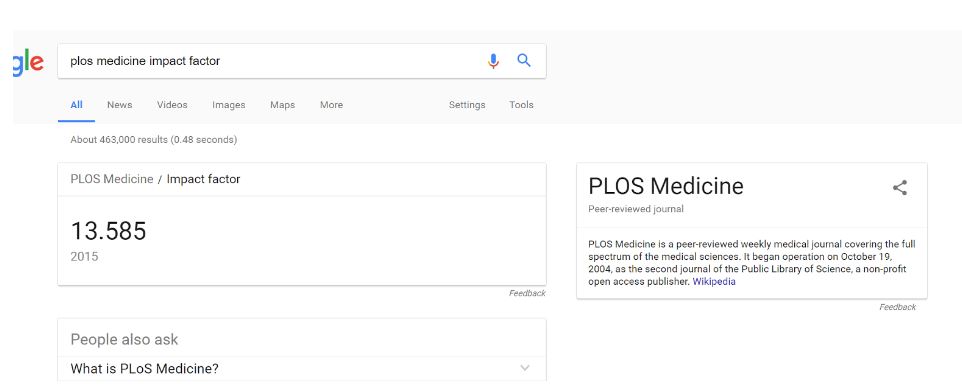

Our first check is to see what the “impact factor” of the journal is. This is a measure of the journal’s influence in the academic community. While a flawed metric for assessing the relative importance of journals, it is a useful tool for quickly identifying journals that are not part of a known circle of academic discourse, or that are not peer-reviewed.

We search Google for PLOS Medicine, and it pulls up a knowledge panel for us with an impact factor.

Impact factor can go into the 30s, but we’re using this as a quick elimination test, not a ranking, so we’re happy with anything over 1. We still have work to do on this article, but it’s worth keeping in the mix.

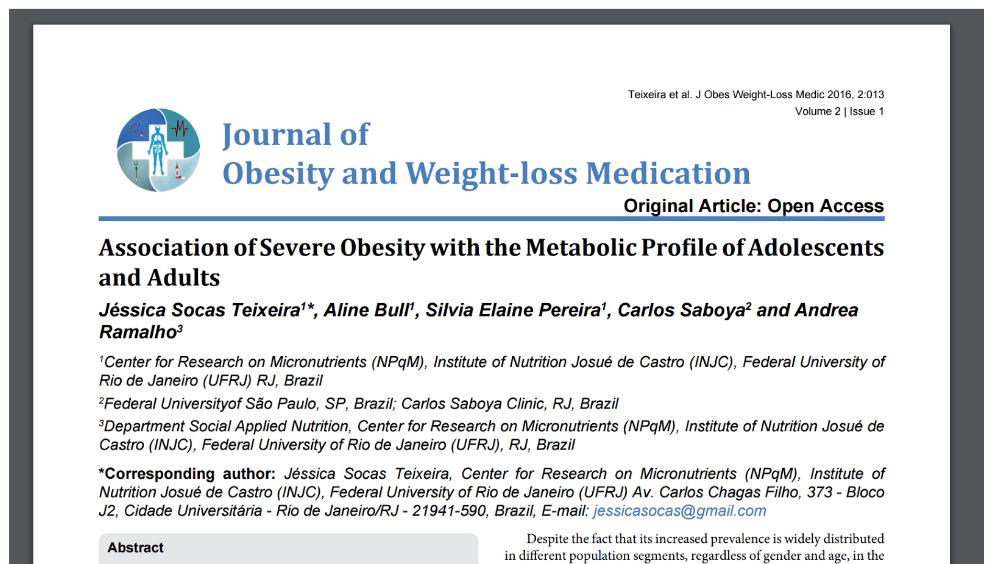

What about this one?

In this case we get a result with a link to this journal at the top, but no panel, as there is no registered impact factor for this journal:

Again, we stress that the article here may be excellent–we don’t know. Likewise, there are occasionally articles published in the most prestigious journals that are pure junk. Be careful in your use of impact factor; a journal with an impact factor of 10 is not necessarily better than a journal with an impact factor of 3, especially if you are dealing with a niche subject.

But in a quick and dirty analysis, we have to say that the PLOS Medicine article is more trustworthy than the Journal of Obesity and Weight-loss Medication article. In fact, if you were deciding whether to reshare a story in your feed and the evidence for the story came from this Obesity journal, I’d skip reposting it entirely.

22

Not all, or even most, expertise is academic. But when the expertise cited is academic, scholarly publications by the researcher can go a long way to establishing their position in the academic community.

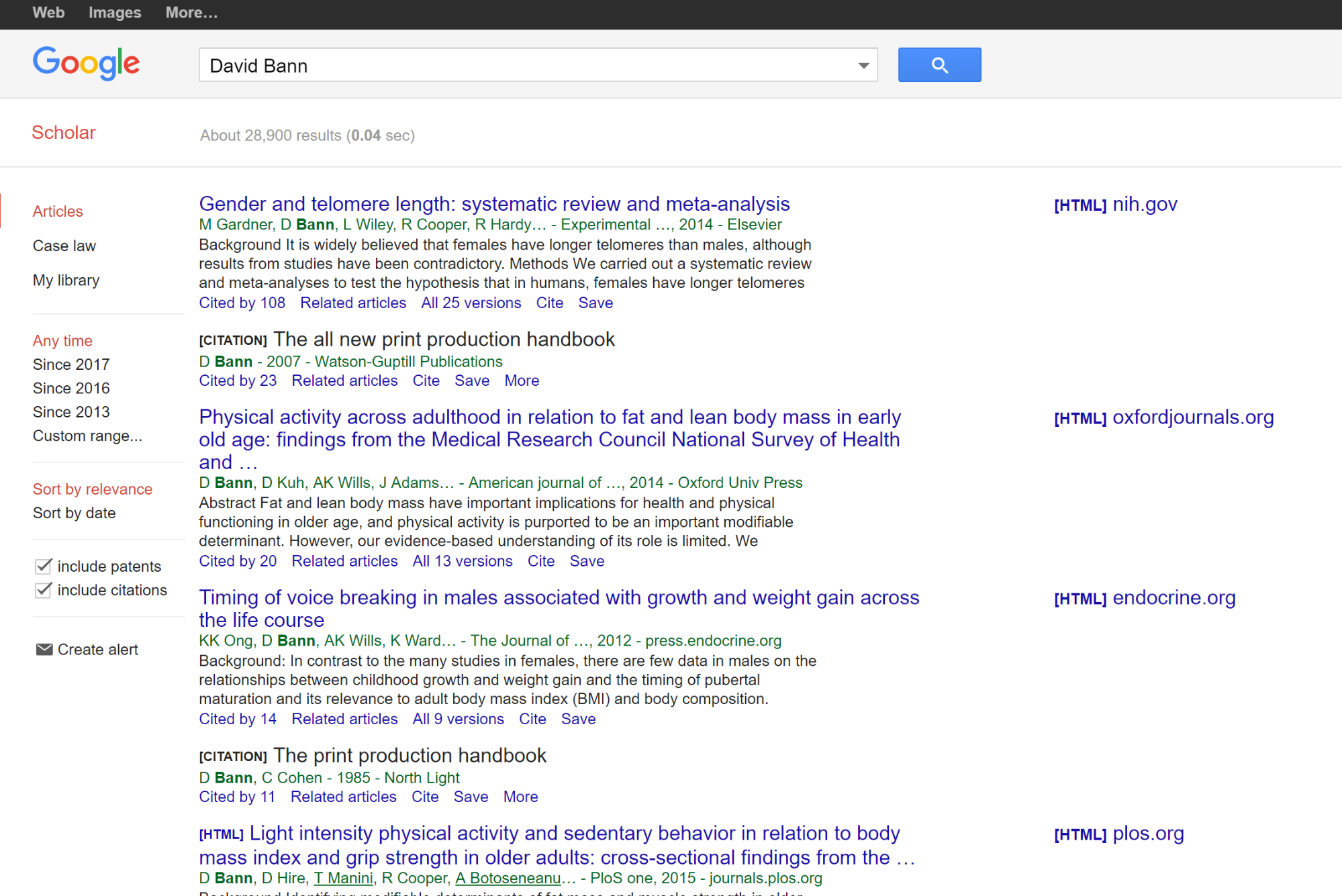

Let’s look at David Bann, who wrote the PLOS Medicine article we looked at a chapter ago. To do that we go to Google Scholar (not the general page) and type in his name.

We see a couple things here. First, he has a history of publishing in this area of lifespan obesity patterns. At the bottom of each result we see how many times each article he is associated with is cited. These aren’t amazing numbers, but for a niche area they are a healthy citation rate. Many articles published aren’t cited at all, and here at least one work of his has over 100 citations.

Additionally if we scan down that right side column we see some names we might recognize–the National Institutes of Health (NIH) and another PLOS article.

Keep in mind that we are looking for expertise in the area of the claim. These are great credentials for talking about obesity. They are not great credentials for talking about opiate addiction. But right now we care about obesity, so that’s OK.

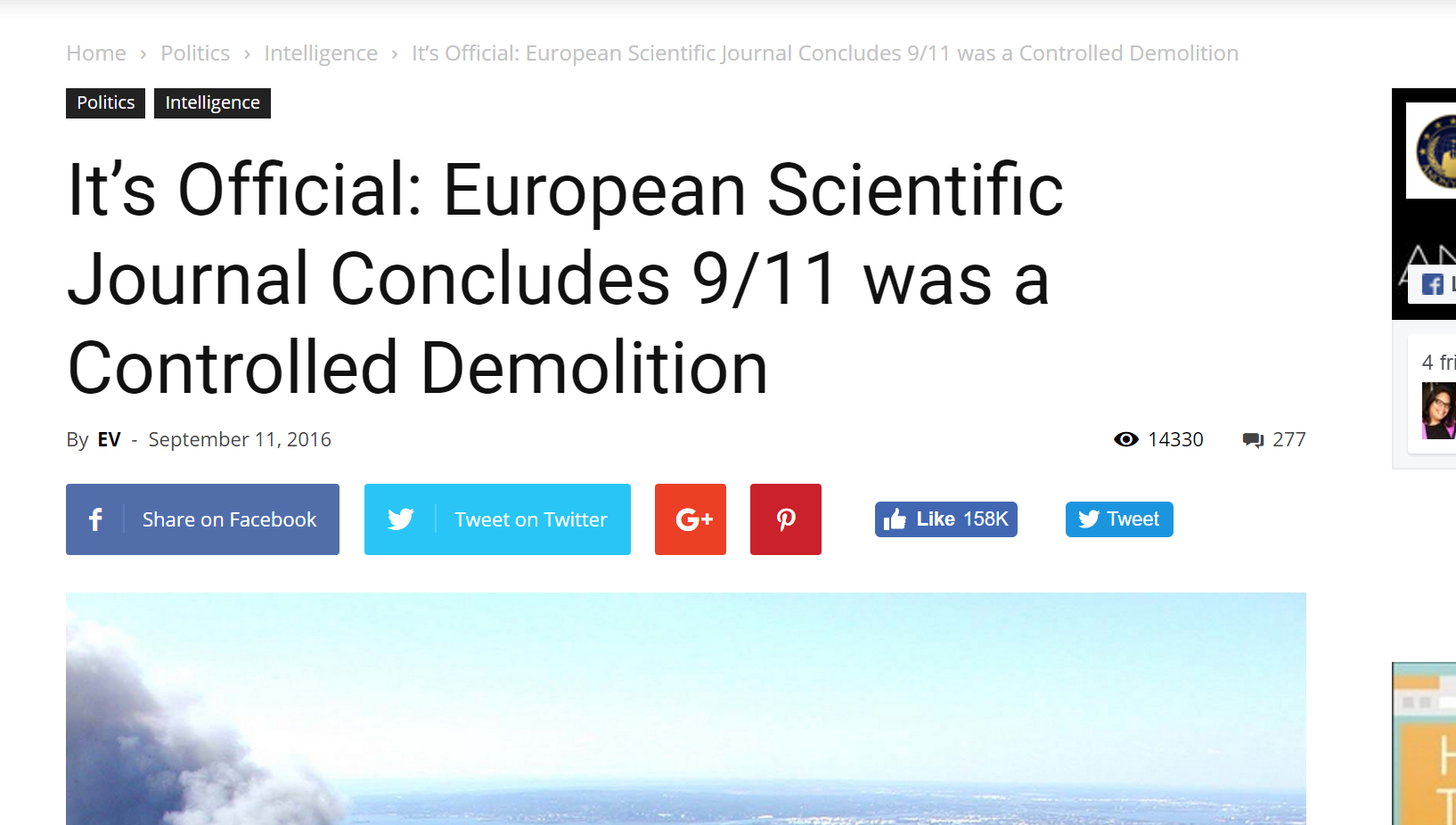

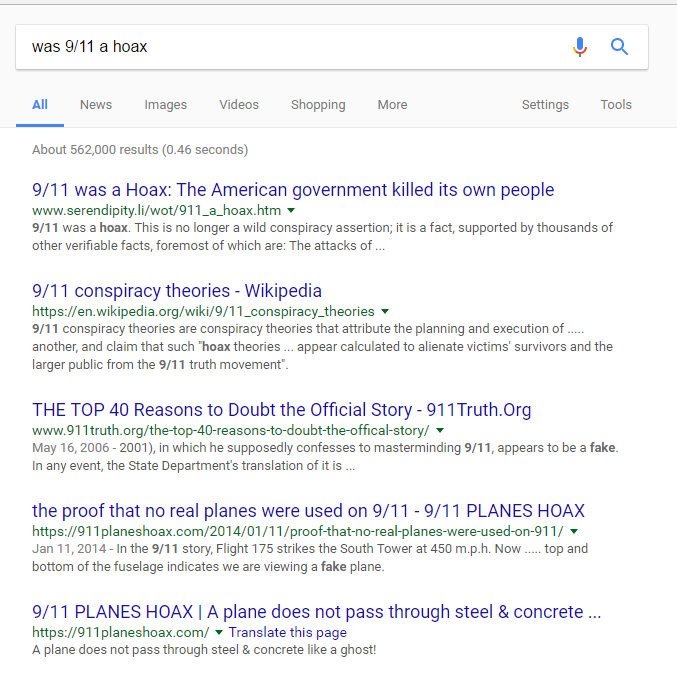

By point of comparison, we can look at a publication in Europhysics News that attacks the standard view of the 9/11 World Trade Center collapse. We see this represented in this story on popular alternative news and conspiracy site AnonHQ:

The journal cited is Europhysics News, and when we look it up in Google we find no impact factor at all. In fact, a short investigation of the journal reveals it is not a peer-reviewed journal, but a magazine associated with the European Physics Society. The author here is either lying, or does not understand the difference between a scientific journal and a scientific organization’s magazine.

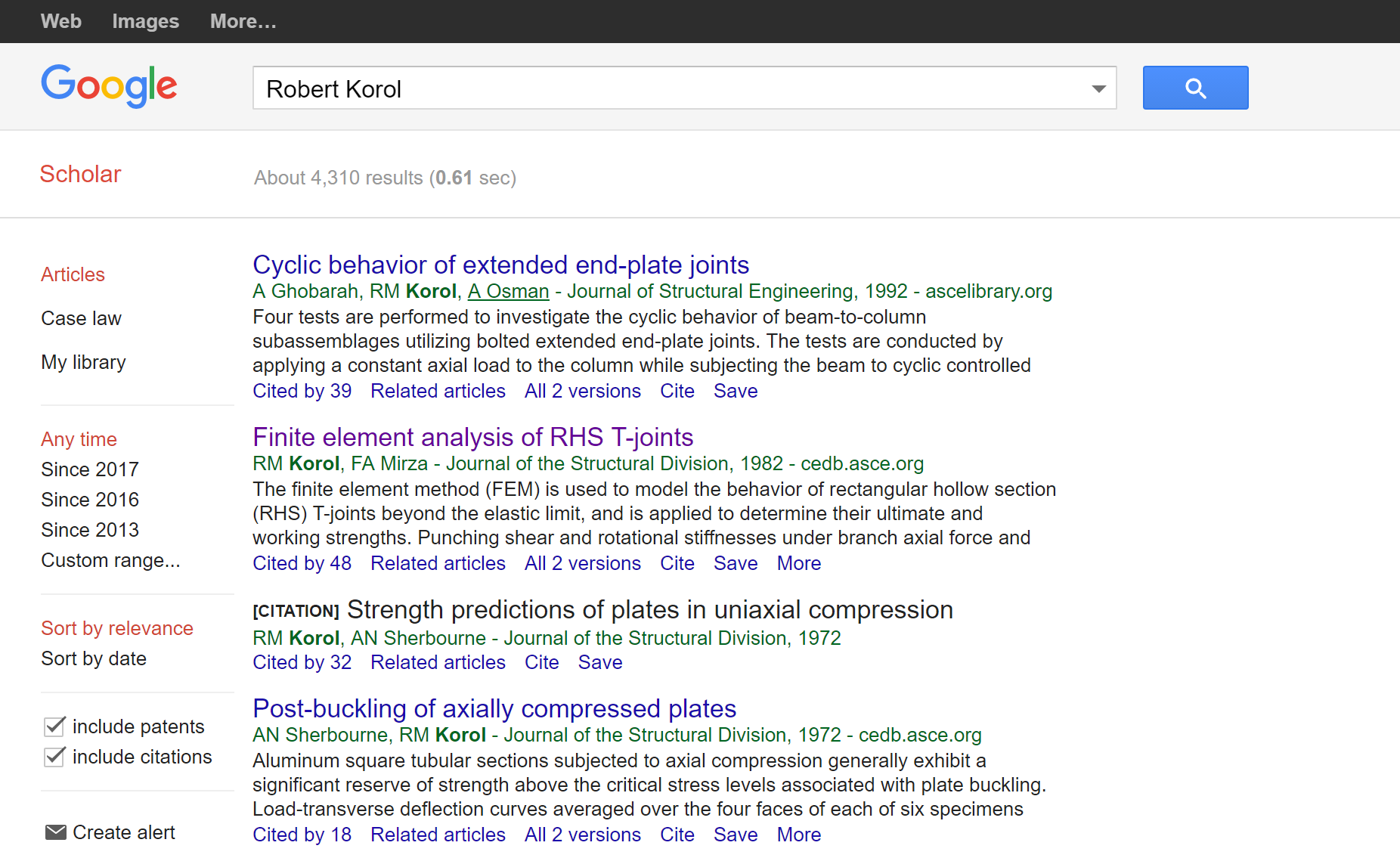

So much for the source. But what about the authors? Do they have a variety of papers on the mathematical modeling of building demolitions?

If you punch the names into Google Scholar, you’ll find that at least one of the authors does have some modelling experience on architectural stresses, although most of his published work was from years ago.

What do we make of this? It’s fair to say that the article here was not peer-reviewed and shouldn’t be treated as a substantial contribution to the body of research on the 9/11 collapse. The headline of the blog article that brought us here is wrong, as is their claim that a European Scientific Journal concluded 9/11 was a controlled demolition. That’s flat out false.

But it’s worthwhile to note that at least one of the people writing this paper does have some expertise in a related field. We’re left with that question of “What does generally mean?” in the phrase “Experts generally agree on X.”

What should we do with this article? Well, it’s an article published in a non-peer-reviewed journal by an expert who published a number of other respected articles (though quite a long time ago, in one case). To an expert, that definitely could be interesting. To a novice looking for the majority and significant minority views of the field, it’s probably not the best source.

23

This brings us to my third point, which is how to think about research articles. People tend to think that newer is better with everything. Sometimes this is true: new phones are better than old phones and new textbooks are often more up-to-date than old textbooks. But the understanding many students have about scholarly articles is that the newer studies “replace” the older studies. You see this assumption in the headline: “It’s Official: European Scientific Journal Concludes…”

In general, that’s not how science works. In science, multiple conflicting studies come in over long periods of time, each one a drop in the bucket of the claim it supports. Over time, the weight of the evidence ends up on one side or another. Depending on the quality of the new research, some drops are bigger than others (some much bigger), but overall it is an incremental process.

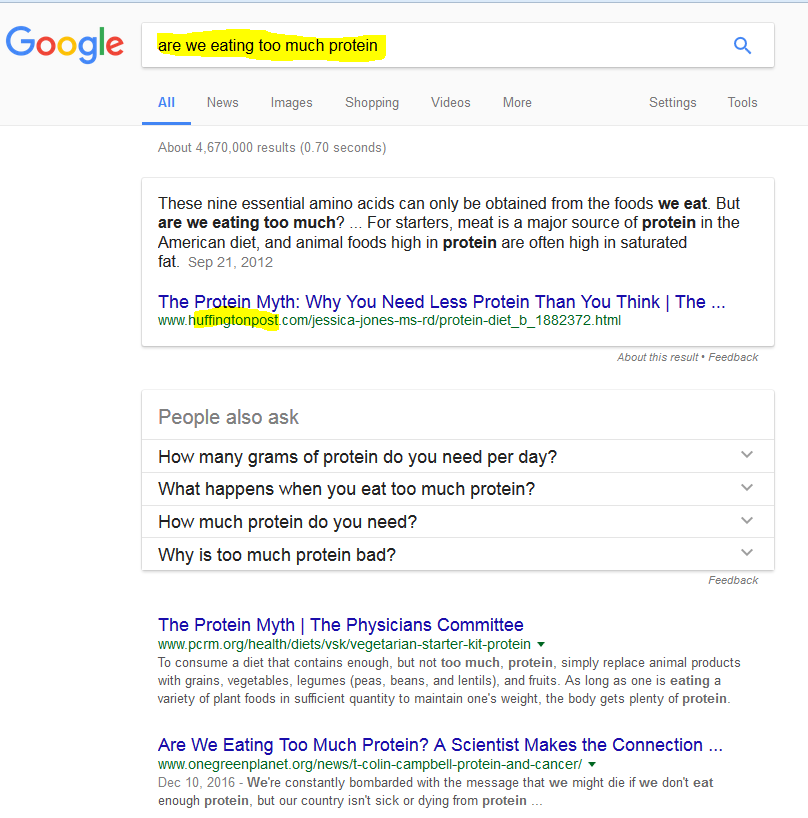

As such, studies that are consistent with previous research are often more trustworthy than those that have surprising or unexpected results. This runs counter to the narrative promoted by the press: “news,” after all, favors what is new and different. The unfortunate effect of the press’s presentation of science (and in particular science around popular issues such as health) is that they would rather not give a sense of the slow accumulation of evidence for each side of an issue. Their narrative often presents a world where last month’s findings are “overturned” by this month’s findings, which are then, in turn, “overturned” back to the original finding a month from now. This whiplash presentation “Chocolate is good for you! Chocolate is bad for you!” undermines the public’s faith in science. But the whiplash is not from science: it is a product of the inappropriate presentation from the press.

As a fact-checker, your job is not to resolve debates based on new evidence, but to accurately summarize the state of research and the consensus of experts in a given area, taking into account majority and significant minority views.

For this reason, fact-checking communities such as Wikipedia discourage authors from over-citing individual research, which tends to point in different directions. Instead, Wikipedia encourages users to find high quality secondary sources that reliably summarize the research base of a certain area, or research reviews of multiple works. This is good advice for fact-checkers as well. Without an expert’s background, it can be challenging to place new research in the context of old, which is what you want to do.

Here’s a claim (two claims, actually) that ran recently in the Washington Post:

The alcohol industry and some government agencies continue to promote the idea that moderate drinking provides some health benefits. But new research is beginning to call even that long-standing claim into question.

Reading down further, we find a more specific claim: the medical consensus is that alcohol is a carcinogen even at low levels of consumption. Is this true?

The first thing we do is look at the authorship of the article. It’s from the Washington Post, which is a generally reliable publication, and one of its authors has made a career of data analysis (and actually won a Pulitzer prize as part of a team that analyzed data and discovered election fraud in a Florida mayoral race). So one thing to think about is that these people may be better interpreters of the data than you. (Key thing for fact-checkers to keep in mind: You are often not a person in a position to know.)

But suppose we want to dig further and find out if they are really looking at a shift in the expert consensus, or just adding more drops to the evidence bucket. How would we do that?

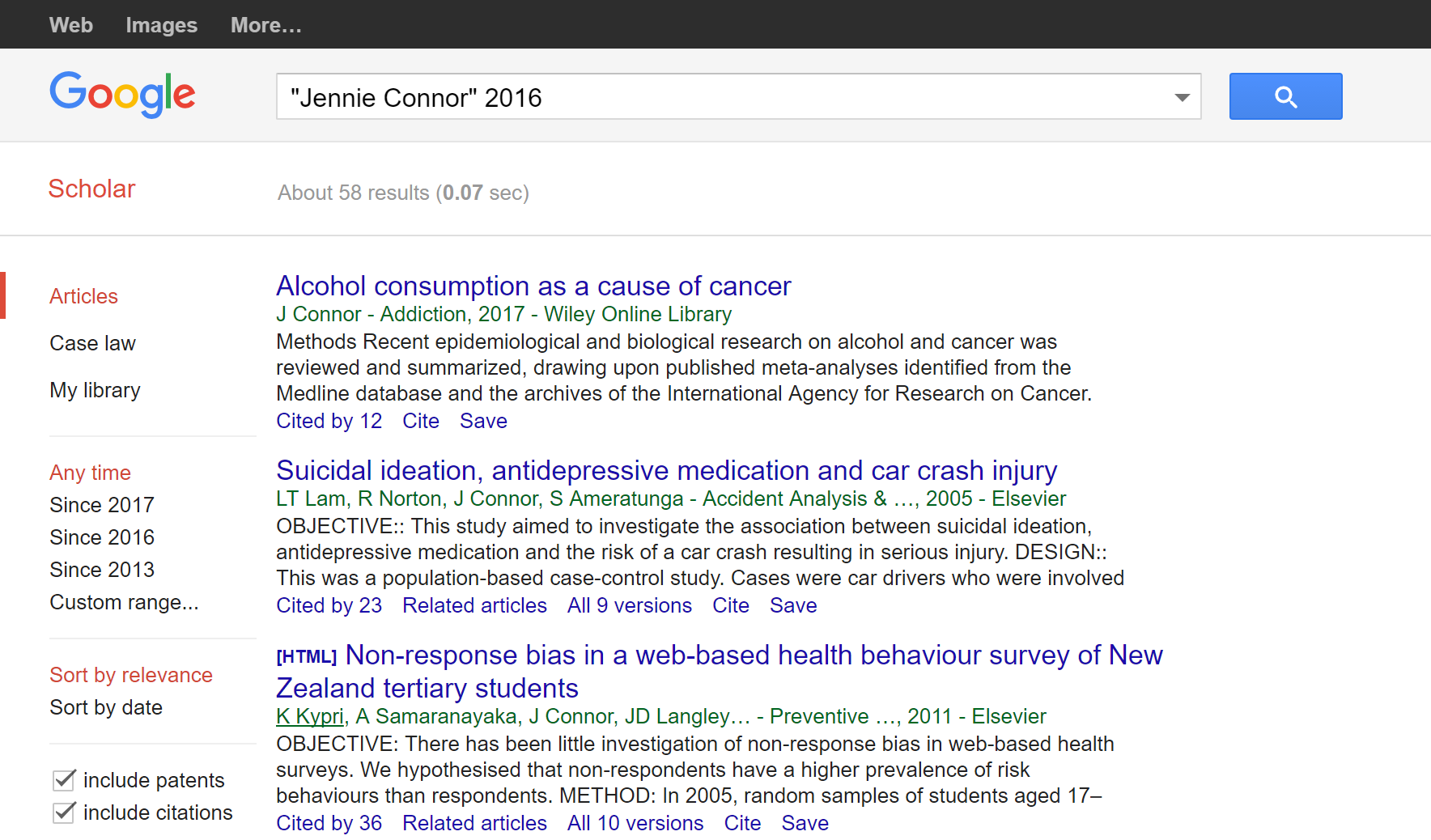

First, we’d sanity check where the pieces they mention were published. The Post article mentions two articles by “Jennie Connor, a professor at the University of Otago Dunedin School of Medicine,” one published last year and the other published earlier. Let’s find the more recent one, which seems to be a key input into this article. We go to Google Scholar and type in “‘Jennie Connor’ 2016”:

As usual, we’re scanning quickly to get to the article we want, but also minding our peripheral vision here. So, we see that the top one is what we probably want, but we also notice that Connor has other well-cited articles in the field of health.

What about this article on “Alcohol consumption as a cause of cancer”? It was published in 2017 (which is probably the physical journal’s publication date, the article having been released in 2016). Nevertheless, it’s already been cited by twelve other papers.

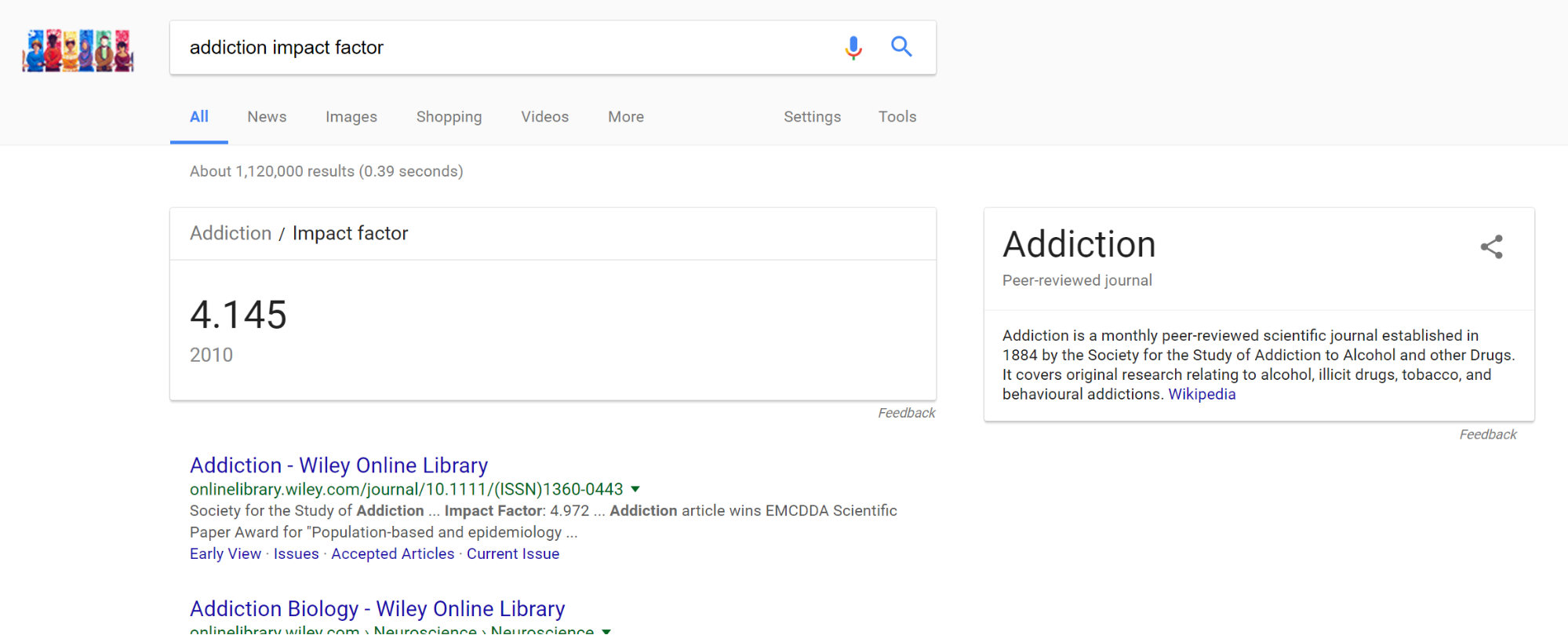

What about this publication Addiction? Is it reputable?

Let’s take a look with an impact factor search.

Yep, it looks legit. We also see in the knowledge panel to the right that the journal was founded in the 1880s. If we click through to that Wikipedia article, it will tell us that this journal ranks second in impact factor for journals on substance abuse.

Again, you should never use impact factor for fine-grained distinctions. What we’re checking for here is that the Washington Post wasn’t fooled into covering some research far out of the mainstream of substance abuse studies, or tricked into covering something published in a sketchy journal. It’s clear from this quick check that this is a researcher well within the mainstream of her profession, publishing in prominent journals.

Next we want to see what kind of article this is. Sometimes journals publish short reactions to other works, or smaller opinion pieces. What we’d like to see here is that this was either new research or a substantial review of research. We find from the abstract that it is primarily a review of research, including some of the newer studies. We note that it is a six-page article, and therefore not likely to be a simple letter or response to another article. The abstract also goes into detail about the breadth of evidence reviewed.

Frustratingly, we can’t get our hands on the article, but this probably tells us enough about it for our purposes.

24

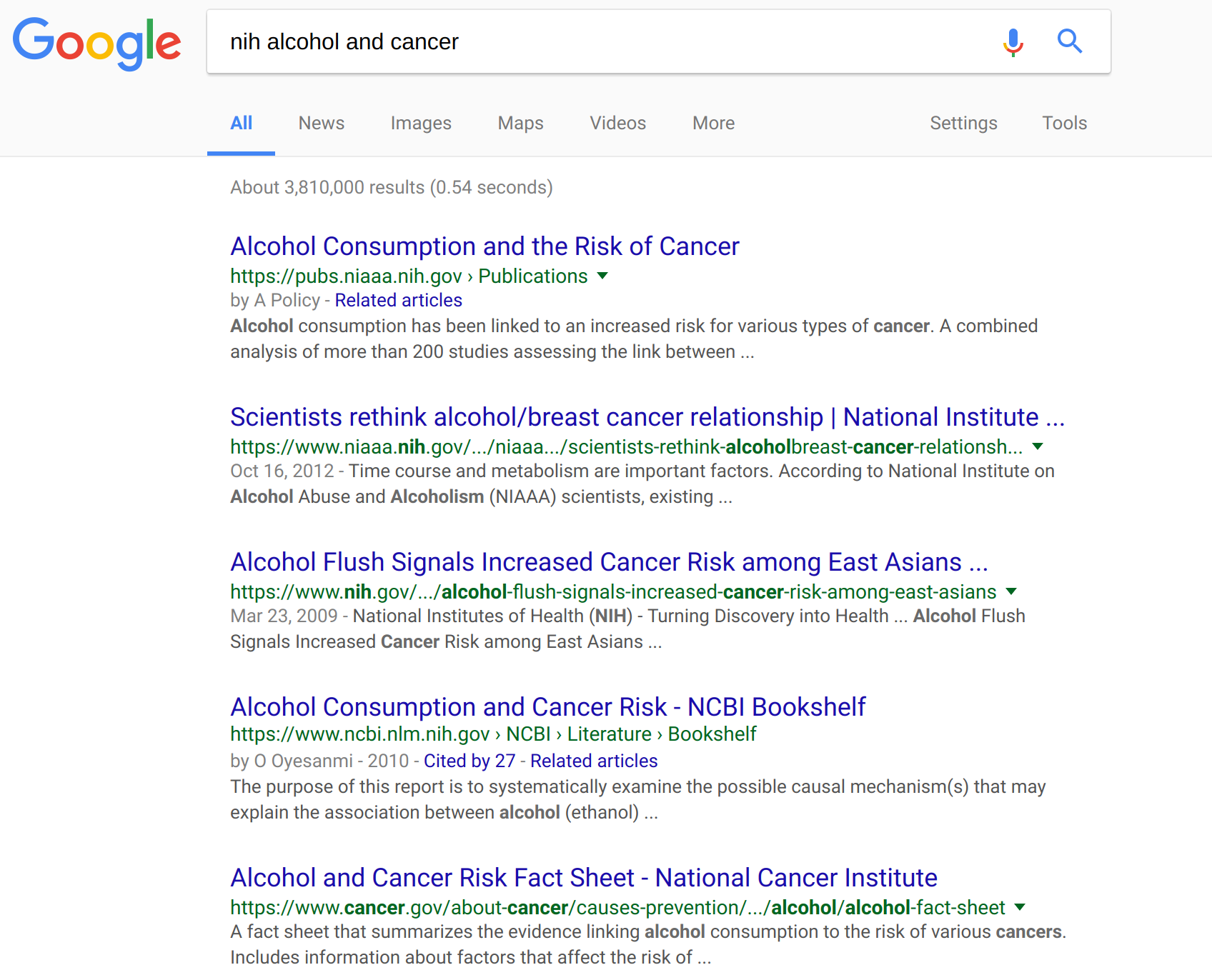

Let’s continue with the “alcohol is closely associated with cancer” claim from the last chapter. Let’s see if we can get a decent summary from a respected organization that deals with these issues.

This takes a bit of domain knowledge, but for information on disease, the United States’s National Institutes of Health (NIH) is considered one of the leading authorities. What do they say about this issue?

What we don’t want here is a random article. We’re not an expert and we don’t want to have to guess at the weights to give individual research. We want a summary.

And as we scan the results we see a “risk fact-sheet” from the National Cancer Institute. In general, domain suffixes (com/org/net/etc) don’t mean anything, but “.gov” domains are strictly regulated, so we know this is from the (U.S.) federal government. A fact sheet is a summary, which is what we want, so we click through.

This page doesn’t mince words:

Based on extensive reviews of research studies, there is a strong scientific consensus of an association between alcohol drinking and several types of cancer (1, 2). In its Report on Carcinogens, the National Toxicology Program of the US Department of Health and Human Services lists consumption of alcoholic beverages as a known human carcinogen. The research evidence indicates that the more alcohol a person drinks—particularly the more alcohol a person drinks regularly over time—the higher his or her risk of developing an alcohol-associated cancer. Based on data from 2009, an estimated 3.5 percent of all cancer deaths in the United States (about 19,500 deaths) were alcohol related (3).

With the “.gov” extension, this page is pretty likely to be linked to the NIH. But just in case, we Google search the site to see who runs it and what their reputation is.

Since we’re reading laterally, let’s click on the link five results down to see what the NIH says about the National Cancer Institute. Again, we’re just sanity checking our impression that this is an authoritative body of the NIH. Here’s its blurb from the fifth result down:

The National Cancer Institute (NCI) is part of the National Institutes of Health (NIH), which is one of 11 agencies that compose the Department of Health and Human Services (HHS). The NCI, established under the National Cancer Institute Act of 1937, is the Federal Government’s principal agency for cancer research and training.

As always, we glance up to the web address and make sure we are really getting this information from the NIH. We are.

If we were a researcher, we would sort through more of this. We might review individual articles or make sure that some more out-of-the-mainstream views are not being ignored. Such an effort would take a deep background and understanding of the underlying issues. But we’re not researchers. We’re just people looking to find out if our rationalization for those two after-work drinks is maybe a bit bogus. And on that level, it’s not looking particularly good for us. We have a major review of the evidence in a major journal stating there’s really no safe level of drinking when it comes to cancer, and we have the NIH–one of the most trusted sources of health information in the U.S. (and not exactly a fad-chaser) telling us in an FAQ that there is a strong consensus that alcohol consumption predicts cancer.

25

One other thing to note here is that in the past chapter or two we followed a different pattern than a lot of web searching. Here we decided who would be the most trustworthy source of medical consensus (the NIH) and looked up what they said.

This is an important technique to have in your research mix. Too often, we execute web search after web search without first asking who would constitute an expert. Unsurprisingly, when we do things in this order, we end up valuing the expertise of people who agree with us and devaluing the expertise of those who don’t. If you find yourself going down a rabbit hole of conflicting information in your searches, back up a second and ask yourself: whose expertise would you respect? Maybe it’s not the NIH. Maybe it’s the Mayo Clinic, or Medline, or the World Health Organization. But deciding who has expertise before you search will mediate some of your worst tendencies toward confirmation bias.

So, given the evidence we’ve seen in previous chapters about alcohol and cancer–am I going to give up my after-work porter? I don’t know. I really like porter. The evidence is still emerging, and maybe the risk increase is worth it. But I’m also convinced the Washington Post article isn’t the newest version of “eating grapefruit will make you thinner.” It’s not even “Nutrasweet may make you fat,” which is an interesting finding, but a point around which there is no consensus. Instead “small amounts of daily alcohol increase cancer risk” represents a real emerging consensus in the research, and from our review we find it’s not even a particularly new trend. The consensus emerged some time ago (the NIH FAQ dates back to 2010); it’s just been poorly communicated to the public.

26

Evaluating news sources is one of the more contentious issues out there. People have their favorite news sources and don’t like to be told that their news source is untrustworthy.

For fact-checking, it’s helpful to draw a distinction between two activities:

Most newspaper articles are not lists of facts, which means that outfits like the Wall Street Journal and the New York Times do both news gathering and news analysis in stories. What has been lost in the dismissal of the New York Times as liberal and the Wall Street Journal as conservative is that these are primarily biases of the news analysis portion of what they do. To the extent the bias exists, it’s in what they choose to cover, to whom they choose to talk, and what they imply in the way they arrange those facts they collect.

The news gathering piece is affected by this, but in many ways largely separate, and the reputation for fact checking is largely separate as well. MSNBC, for example, has a liberal slant to its news, but a smart liberal would be more likely to trust a fact in the Wall Street Journal than a fact uttered on MSNBC because the Wall Street Journal has a reputation for fact-checking and accuracy that MSNBC does not. The same holds true for someone looking at the New York Observer vs. the New York Times. Even if you like the perspective of the Observer, if you were asked to bet on the accuracy of two pieces–one from the Observer and one from the Times–you could make a lot of money betting on the Times.

Narratives are a different matter. You may like the narrative of MSNBC or the Observer–or even find it more in line with reality. You might rely on them for insight. But if you are looking to validate a fact, the question you want to ask is not always “What is the bias of this publication?” but rather, “What is this publication’s record with concern to accuracy?”

27

Experts have looked extensively at what sorts of qualities in a news source tend to result in fair and accurate coverage. Sometimes, however, the number and complexity of the various qualities can be daunting. We suggest the following short list of things to consider.

Here’s an important tip: approach agenda last. It’s easy to see bias in people you disagree with, and hard to see bias in people you agree with. But bias isn’t agenda. Bias is about how people see things; agenda is about what the news source is set up to do. A site that clearly marks opinion columns as opinion, employs dozens of fact-checkers, hires professional reporters, and takes care to be transparent about sources, methods, and conflicts of interest is less likely to be driven by political agenda than a site that does not do these things. And this holds even if the reporters themselves may have personal bias. Good process and news culture goes a long way to mitigating personal bias.

Yet, you may see some level of these things and still have doubt. If the first three indicators don’t settle the question for you, you should consider agenda. Is the source connected to political party leadership? Funded by oil companies? Have the owners made comments about what they are trying to achieve with their publication, and are those ends about specific social or political change or about creating a more informed public?

Again, we cannot stress enough: you should read things by people with political agendas. It’s an important part of your news diet. It’s also the case that sometimes the people with the most expertise work for organizations that are trying to accomplish social or political goals. But when sourcing a fact or a statistic, agenda can get in the way and you’d want to find a less agenda-driven source if possible.

28

When it comes down to accuracy, there are a number of national newspapers in most countries that are well-staffed with reporters and have an editorial process that places a premium on accuracy. These papers are sometimes referred to as “newspapers of record.” We're aware that the origin of the term was originally a marketing plan to distinguish the New York Times from its rivals. At the same time, it captures an aspiration that is not common across many publications in a country. When I wrote code for Newsbank's Historical Paper Archive, we took the idea of Newspapers of Record seriously even on a local level. With the mess of paper startups and failures in the 1800s, understanding what was reliable was key. Which of that multitude of papers was likely to make the best go at covering all matters of local importance? “National newspapers of record” are distinguished in two ways:

The United States is considered by some to have at least four national newspapers of record:

You could add in the Boston Globe, Miami Herald, or Chicago Tribune. Or subtract the LA Times or Washington Post. These lists are meant to be starting points, indicating that a given publication has a greater reputation and reach than, say, the Clinton Daily Item.

Some other English-language newspapers of record:

Does that mean these papers are the arbiters of truth? Nope. Where there are disagreements between these papers and other reputable sources, it could be worth investigating.

As an example, in the run up to the Iraq War, the Knight Ridder news agency was in general a far more reliable news source on issues of faulty intelligence than the New York Times. In fact, reporting from the New York Times back then was particularly bad, and many have pointed to one reporter in particular, Judith Miller, who was far too credulous in repeating information fed to her by war hawks. Had you relied on just the New York Times for your information on these issues, you would have been misinformed.

There is much to be said about failings such as this, and it is certainly the case that high profile failings such as these have eroded faith in the press more generally, and, for some, created the impression that there really is no difference between the New York Times, the Springfield Herald, and your neighbor’s political Facebook page. This is, to say the least, overcompensation. We rely on major papers to tell us the truth, and rely on them to allocate resources to investigate and present that truth with an accuracy hard to match on a smaller budget. When they fail, as we saw with Iraq, horrible things can happen. But that is as much a testament to how much we rely on these publications to inform our discourse as it is a statement on their reliability.

A literate fact-checker does not take what is said in newspapers of record as truth. But, likewise, any person who doesn’t recognize the New York Times or Sydney Morning Herald as more than your average newspaper is going to be less than efficient at evaluating information. Learn to recognize the major newspapers in countries whose news you follow to assess information more quickly.

29

This guy has a pretty negative reaction to something published in a highly reputable journal. Is he an expert, or just a guy with opinions about things?

https://twitter.com/MichaelESmith/status/832603639260647425

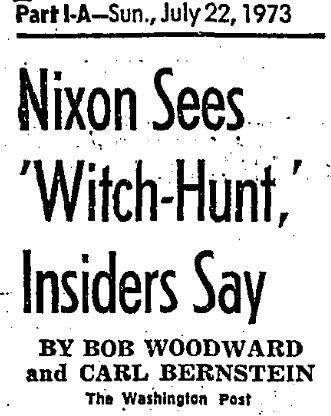

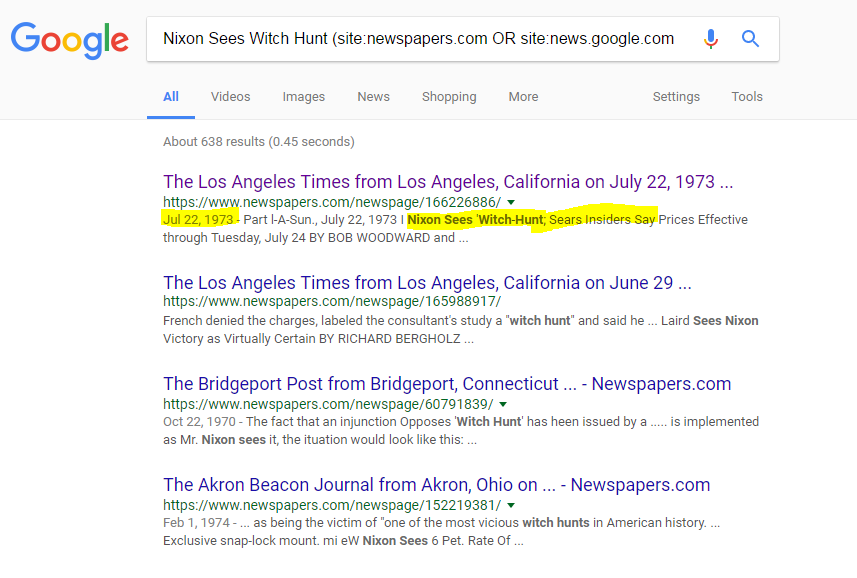

Are these the reporters who brought down Nixon? Is this a trustworthy reporter sharing this photo?

https://twitter.com/pixelatedboat/status/833302789665140740

30

Get together in small groups, and by both pooling group knowledge and doing research, develop a list of three authoritative books/websites for information on one of the following subjects:

Your sources should be:

Or, the sources should be:

When each group has finished their selection, trade your list of expert books/sites with another group and have that group critique the list.

Some questions for reflection:

V

31

One relatively common form of misinformation is the fake celebrity retweet. Sometimes this happens by accident–a person mistakenly retweets a parody account as real. Sometimes this happens by design, with an account faking a retweet. Here are some tips to make sure that the tweet you are looking at on Twitter is from the person you are attributing it to.

With Twitter, accounts are generally (although not always) run by a single person. However, unlike Facebook, Twitter does not enforce a “real name” policy, which makes it easy for one person to run multiple accounts, and to run accounts under different names. In fact, an important part of Twitter culture is the constellation of parody accounts, bots, and single issue accounts that amuse and inform Twitter subscribers.

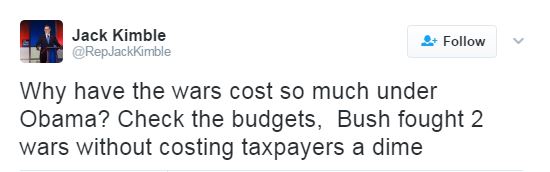

At the same time, it’s easy to get confused. As an example, consider the account of Representative Jack Kimble. Here’s a typical tweet:

If you’re a liberal, looking at this tweet may get your blood boiling. How can anyone possibly believe this? Especially a Representative?

Scanning the Twitter bio doesn’t help.

Here we see that he’s from the 54th District of California and he’s got a book out. Now if we’re reading carefully we might notice some fishy things here: his book, Profiles in Courageousness, seems like a parodic re-titling of Jack Kennedy’s Profiles in Courage. “E pluribus unum,” which means “From the many, one,” is translated to “1 nation under God”.

Oh, also: California only has 53 districts.

Unfortunately, you’ll likely be in such a huff about the comments that you won’t notice any of these things. So what is a general purpose indicator that you need to slow down? In most cases, it’s going to be the absence of a “verified account” marker.

As a counter-example to “Representative Kimble,” here’s a real representative, Jason Chaffetz, from Utah’s 3rd District.

That little blue seal with the check mark (the “verified badge”) indicates that this is a “verified identity” by Twitter—Twitter asserts that this person has proved they are who they say they are.

Who gets to get verified? It’s a bit unclear. Twitter puts it this way:

An account may be verified if it is determined to be an account of public interest. Typically this includes accounts maintained by users in music, acting, fashion, government, politics, religion, journalism, media, sports, business, and other key interest areas.

However, all members of Congress and senior administration officials qualify for such status. So do most major public figures and prominent writers. If you don’t see the blue badge, either disregard the tweet as suspicious, or do further research.

One additional note: sometimes people try to fake these indicators; an example is faking a verification symbol in a header.

This user has used their background image to place a verification badge next to their name. To steer clear of these sort of hacks, always view the badge in the sidebar or small “hover” card, not the header. To be extra sure it’s legit, hover your cursor over it– the words “verified account” should pop up.

This sounds complicated, but once you learn it, it takes maybe two seconds. Here I am, for example, checking to see if this is really New York Governor Andrew Cuomo’s Spotify playlist, or a fake account, using a quick hover technique:

In this case it’s verified. The governor should probably lay off Billy Joel a bit, but this is a legitimate tweet.

Not all celebrities have verified accounts. If you don’t find the verification badge, you may have to dig a little deeper.

There are a couple things to look for in an unverified account:

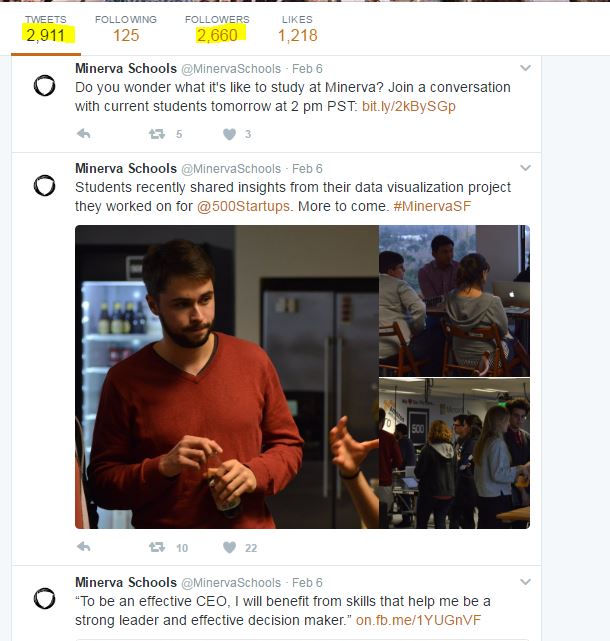

As an example, here is the Minerva Schools Twitter account. Minerva is a small, but high-profile school in California. The account is not verified. Is the account legitimate? Is it really Minerva?